Predictive Sales AI: Inputs, Models, Pitfalls

A practical guide to building reliable predictive sales AI: the inputs that matter, model choices, measurement, and common production pitfalls.

Predictive sales AI is supposed to answer simple questions like “Who should we talk to next?” and “Which deals are actually likely to close?” The hard part is that sales data is messy, incentives are misaligned, and the easiest-to-build models often produce the least trustworthy outputs.

This guide breaks predictive sales AI into three practical layers:

- Inputs (what data you need, and what “good” looks like)

- Models (what approaches fit common sales predictions)

- Pitfalls (why models fail in production, even when metrics look great)

What predictive sales AI is (and what it is not)

Predictive sales AI uses historical data to estimate the probability of a future sales outcome, for example:

- Will this lead become a Sales Qualified Lead (SQL) within 14 days?

- Will this outbound conversation produce a booked meeting?

- Will this opportunity close by end of quarter?

- Will this customer churn in the next 90 days?

It is different from generative AI, which produces content (emails, call summaries, LinkedIn messages). Generative systems can create signals that feed predictive systems (for example, extracting intent from a message thread), but the predictive system still lives or dies on measurement, labels, and operational use.

A useful rule: if it does not change a decision (routing, prioritization, outreach, or staffing), it is not predictive sales AI; it is just analytics.

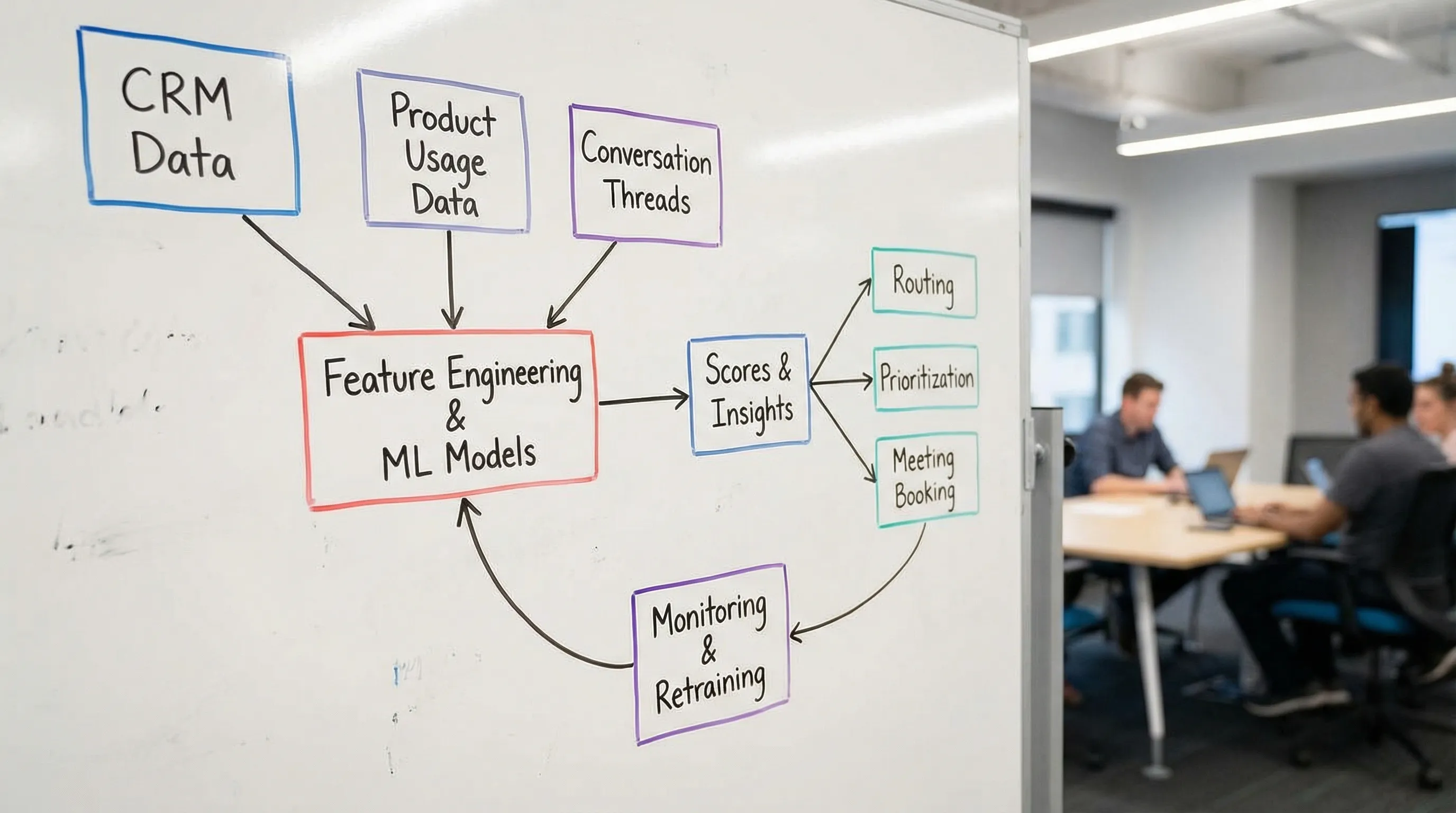

Inputs: the data that actually makes predictions work

Before choosing a model, define:

- Unit of prediction: lead, contact, account, opportunity, conversation thread, or customer.

- Time horizon: next 7 days, 30 days, this quarter, next 12 months.

- Action: what someone or something will do differently based on the score.

Then map the inputs you can reliably capture.

The practical input categories

Most predictive sales AI projects pull from the same buckets, but teams underestimate how often “having the data” differs from “having usable data.”

| Input category | Examples | Common failure mode | What “good” looks like |
|---|---|---|---|
| CRM lifecycle data | Lead source, stage history, opportunity amount, close dates | Stages updated late or inconsistently | Timestamped changes, clear stage definitions, required fields |
| Activity and touches | Emails sent, calls logged, meetings held, sequence steps | Activity inflation and missing logs | Instrumentation you trust and cannot easily game |
| Buyer intent signals | Form fills, pricing page views, webinar attendance | Intent is stale or attributed to the wrong account | Identity resolution, recency weighting, clear triggers |
| Firmographics | Industry, headcount, region, tech stack | Overgeneralization and bias | Fields normalized, updated, and relevant to ICP |
| Product usage (PLG) | Activation events, feature adoption, seats used | Confusing correlation with causation | Cohorts, retention baselines, meaningful adoption milestones |
| Conversation signals | Reply sentiment, objections, qualification evidence | Subjective notes, inconsistent capture | Standardized evidence, extracted from the actual thread |

Why conversation data is becoming a core input

In many B2B motions, the strongest leading indicators show up inside conversations, not in CRM fields. Examples:

- A prospect asks about pricing or timeline.

- They mention a tool you replace.

- They confirm they are evaluating vendors.

- They ask to loop in a stakeholder.

Those are predictive signals because they are closer to the buying decision than most “activity” data.

For LinkedIn-led outbound, conversation events like connection acceptance, reply, “qualified conversation,” and meeting booked often arrive earlier than opportunity creation. Platforms like Kakiyo are relevant here because they manage LinkedIn conversations and can standardize how conversation outcomes and qualification evidence are captured, which makes downstream scoring and prediction less dependent on rep-by-rep note quality.

Feature design: the “hidden work” that drives most lift

In sales, models often improve more from better features than from more complex algorithms. A few patterns that routinely matter:

- Recency and momentum: last activity date, trend of engagement over the last 7 days vs 30 days.

- Stage velocity: how quickly similar opportunities move, and whether this one is lagging.

- Account-level aggregation: multiple contacts engaging in the same account is usually more predictive than a single “hot lead.”

- Negative signals: “not a fit,” “already chose a vendor,” unsubscribes, ghosting after a pricing conversation.

If your system only captures positive signals, it will produce optimistic scores that sales teams learn to ignore.
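A minimal sketch of these patterns in Python, assuming a hypothetical `Event` record (account, contact, event kind, timestamp) and a hypothetical set of negative event kinds. It computes recency, 7-day vs 30-day momentum, distinct engaged contacts per account, and a negative-signal count, using only events that happened before the scoring time `as_of`. Stage velocity is left out because it needs opportunity stage history.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta

# Hypothetical names for negative buyer signals; map these to your own event taxonomy.
NEGATIVE_KINDS = {"not_a_fit", "chose_competitor", "unsubscribe"}


@dataclass
class Event:
    account_id: str
    contact_id: str
    kind: str  # e.g. "reply", "meeting_accepted", "not_a_fit"
    at: datetime


def account_features(events, account_id, as_of):
    """Point-in-time features for one account, built only from events strictly before as_of."""
    past = [e for e in events if e.account_id == account_id and e.at < as_of]
    positive = [e for e in past if e.kind not in NEGATIVE_KINDS]
    pos_7d = [e for e in positive if e.at >= as_of - timedelta(days=7)]
    pos_30d = [e for e in positive if e.at >= as_of - timedelta(days=30)]

    # Momentum: daily pace over the last 7 days relative to the 30-day pace (>1 means accelerating).
    pace_30d = len(pos_30d) / 30
    momentum = (len(pos_7d) / 7) / pace_30d if pace_30d else 0.0

    return {
        "days_since_last_positive": (as_of - max(e.at for e in positive)).days if positive else None,
        "positive_7d": len(pos_7d),
        "positive_30d": len(pos_30d),
        "momentum_7v30": momentum,
        # Account-level aggregation: distinct engaged contacts, not just one "hot lead".
        "engaged_contacts_30d": len({e.contact_id for e in pos_30d}),
        "negative_signals": sum(e.kind in NEGATIVE_KINDS for e in past),
    }


as_of = datetime(2024, 6, 30)
events = [
    Event("acme", "c1", "reply", datetime(2024, 6, 28)),
    Event("acme", "c2", "meeting_accepted", datetime(2024, 6, 27)),
    Event("acme", "c1", "reply", datetime(2024, 6, 10)),
    Event("acme", "c3", "not_a_fit", datetime(2024, 6, 5)),
    Event("acme", "c1", "reply", datetime(2024, 7, 2)),  # after as_of, so it must be ignored
]
print(account_features(events, "acme", as_of))
```

Filtering on `as_of` inside the feature function is a cheap habit that also guards against the leakage problems described later in this guide.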

Models: which approaches fit common sales predictions

You do not need deep learning to get value from predictive sales AI. In many commercial teams, simpler models win because they are easier to debug, calibrate, and govern.

A practical map of use case to model

| Prediction use case | Typical label (what you predict) | Common model families | What to optimize |
|---|---|---|---|
| Lead scoring | Becomes SQL within N days | Logistic regression, gradient-boosted trees | Precision at top band, calibration |
| Meeting likelihood (outbound) | Books meeting within N days | Gradient-boosted trees, ranking models | Lift vs baseline outreach |
| Opportunity win probability | Closed-won by close date | Gradient-boosted trees, calibrated classifiers | Calibration + accuracy by segment |
| Deal slippage risk | Close date moved or lost | Survival models, classification | Early warning recall |
| Churn risk | Cancels within N days | Survival models, gradient-boosted trees | Recall with actionability |
| Next best action | Choose action that increases conversion | Contextual bandits, uplift modeling | Incremental lift (not correlation) |
| Forecasting (time series) | Revenue next week/month/quarter | Time series + causal features, ensembles | Backtested error metrics |

A key distinction:

- Classification models tell you “likelihood.”

- Ranking models help you prioritize who to work next.

- Uplift / bandit approaches aim to answer “what should we do to change the outcome,” which is closer to true decision optimization.
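To make the distinction concrete, here is a minimal uplift sketch, assuming you have results from a randomized test in which some leads received an action (say, a follow-up sequence) and others did not. It uses the simplest possible two-model estimate, per-segment conversion rate with the action minus without it, rather than a full uplift model or a bandit. The segment names and data are invented for illustration.

```python
from collections import defaultdict


def segment_uplift(records):
    """Per-segment uplift from a randomized test.

    records: iterable of (segment, treated, converted) where treated/converted are bools.
    Returns {segment: conversion_rate(treated) - conversion_rate(control)}.
    """
    # [treated_n, treated_conversions, control_n, control_conversions] per segment
    counts = defaultdict(lambda: [0, 0, 0, 0])
    for segment, treated, converted in records:
        c = counts[segment]
        offset = 0 if treated else 2
        c[offset] += 1
        c[offset + 1] += int(converted)
    return {
        seg: tc / tn - cc / cn
        for seg, (tn, tc, cn, cc) in counts.items()
        if tn and cn  # skip segments missing either arm
    }


# Toy data: SMB converts more often overall, but the action does not change its outcome.
records = (
    [("enterprise", True, i < 4) for i in range(10)]
    + [("enterprise", False, i < 3) for i in range(10)]
    + [("smb", True, i < 6) for i in range(10)]
    + [("smb", False, i < 6) for i in range(10)]
)
print(segment_uplift(records))  # enterprise ≈ 0.1, smb = 0.0
```

In the toy data, SMB has the higher conversion rate (0.6 vs at most 0.4), so a likelihood model would rank it first, yet the action adds nothing there; enterprise is where the action changes the outcome.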

Where LLMs fit in predictive sales AI

Large language models (LLMs) are increasingly useful for turning unstructured text into structured features, for example:

- Extracting whether timeline was mentioned.

- Detecting competitor names.

- Classifying objections or intent.

- Summarizing “evidence” from a thread.

But LLMs introduce new operational risks (inconsistency, hallucinated extraction, prompt drift). If you use them as feature generators, treat them like any other upstream dependency: version prompts, test for stability, and monitor for distribution shift.

For safety and auditability, many teams keep the final predictive model deterministic (for example, gradient-boosted trees) and use LLMs only to populate features.
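One way to test for stability is to run the same extraction several times on the same thread and measure how often the answers agree. The sketch below assumes `extract` is any function (hypothetical here) that wraps your LLM call for a fixed prompt version and returns a dict of fields; a fake, deliberately flaky extractor stands in for the model so the example runs offline.

```python
import itertools
from collections import Counter
from typing import Callable, Dict, List


def extraction_stability(
    extract: Callable[[str], Dict[str, object]], threads: List[str], runs: int = 5
) -> Dict[str, float]:
    """Average share of repeated runs that agree with the most common answer, per field."""
    per_field: Dict[str, List[float]] = {}
    for thread in threads:
        outputs = [extract(thread) for _ in range(runs)]
        fields = set().union(*(o.keys() for o in outputs))
        for field in fields:
            # repr() so unhashable values (lists, dicts) can still be counted
            answers = Counter(repr(o.get(field)) for o in outputs)
            modal_share = answers.most_common(1)[0][1] / runs
            per_field.setdefault(field, []).append(modal_share)
    return {field: sum(s) / len(s) for field, s in per_field.items()}


# Stand-in for a real LLM call (hypothetical): "timeline_mentioned" flips on one call in five.
_flaky = itertools.cycle([True, True, False, True, True])


def fake_extract(thread: str) -> Dict[str, object]:
    return {"timeline_mentioned": next(_flaky), "competitor": None}


scores = extraction_stability(fake_extract, ["thread-1"], runs=5)
print(scores)
```

A field with low agreement (the threshold is a team choice, for example below 0.9) is a candidate to re-prompt, constrain to a fixed label set, or drop. Re-running the check whenever the prompt version or model changes gives you a simple regression test for prompt drift.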

Measurement: how to evaluate predictive sales AI without fooling yourself

Offline metrics are necessary, but not sufficient.

Offline metrics you should understand

- Precision and recall: especially at the top score band (the only part reps will work).

- PR-AUC (precision-recall area): often more informative than ROC-AUC in imbalanced sales outcomes.

- Calibration: whether a “0.70” score actually means about 70 percent likelihood for that segment.

- Lift vs baseline: how much better your top decile converts than random or existing routing.
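The first three of these metrics take only a few lines to compute. A minimal pure-Python sketch, assuming binary labels (1 = converted) and one score per lead. Lift here is measured against the overall base rate, which corresponds to random routing, not your existing routing; `average_precision` is the usual step-wise estimate of PR-AUC.

```python
def _ranked(scores, labels):
    # Highest score first; ties keep input order (a real evaluation should handle ties explicitly).
    return sorted(zip(scores, labels), key=lambda pair: pair[0], reverse=True)


def precision_at_top(scores, labels, frac=0.1):
    """Share of positives in the top `frac` of scores (the band reps will actually work)."""
    ranked = _ranked(scores, labels)
    k = max(1, int(len(ranked) * frac))
    return sum(y for _, y in ranked[:k]) / k


def average_precision(scores, labels):
    """Step-wise PR-AUC: mean precision at each rank where a positive appears."""
    hits, ap = 0, 0.0
    for rank, (_, y) in enumerate(_ranked(scores, labels), start=1):
        if y:
            hits += 1
            ap += hits / rank
    return ap / hits if hits else 0.0


def lift_at_top(scores, labels, frac=0.1):
    """How many times better the top band converts than the overall base rate."""
    base_rate = sum(labels) / len(labels)
    return precision_at_top(scores, labels, frac) / base_rate


scores = [0.9, 0.8, 0.7, 0.6, 0.5, 0.4, 0.3, 0.2, 0.1, 0.05]
labels = [1, 0, 1, 0, 0, 0, 1, 0, 0, 0]
print(precision_at_top(scores, labels, frac=0.2))  # 0.5
print(lift_at_top(scores, labels, frac=0.2))  # ≈ 1.67 (0.5 vs a 0.3 base rate)
print(average_precision(scores, labels))  # ≈ 0.698
```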

Online metrics that prove business impact

What matters is whether the prediction changes outcomes:

- Meetings booked and held per rep-hour

- Qualified conversation rate

- AE acceptance rate (for SDR-sourced meetings)

- Opportunity creation rate from worked leads

- Pipeline created per worked lead

If you are scoring leads but cannot show downstream lift, the model is a dashboard, not a system.

Pitfalls: why predictive sales AI fails in production

Most failures are not “the model was wrong.” They are data, labeling, and adoption failures.

1) Label leakage (the silent killer)

Leakage happens when features include information that would not exist at prediction time. Common examples:

- Using “number of meetings” to predict “meeting booked.”

- Using a future stage field to predict earlier conversion.

- Including post-opportunity notes in lead scoring.

Leakage creates excellent offline metrics and terrible real-world performance.
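A practical defense is to store, for every feature value, when it was observed, and audit training rows against the moment the prediction would have been made. The sketch below assumes that kind of timestamped feature log; the row layout and feature names are hypothetical (many CRMs only keep the current value of a field, which is itself a leakage risk).

```python
from datetime import datetime


def leaky_features(rows):
    """Count, per feature, rows where the value was observed at or after prediction time.

    rows: dicts with "predicted_at" (datetime) and "features" = {name: (value, observed_at)}.
    """
    leaks = {}
    for row in rows:
        for name, (_value, observed_at) in row["features"].items():
            if observed_at >= row["predicted_at"]:
                leaks[name] = leaks.get(name, 0) + 1
    return leaks


rows = [
    {
        "predicted_at": datetime(2024, 6, 1),
        "features": {
            "pricing_page_views": (3, datetime(2024, 5, 30)),
            "opportunity_stage": ("Negotiation", datetime(2024, 6, 10)),  # written later: leak
        },
    },
    {
        "predicted_at": datetime(2024, 6, 3),
        "features": {
            "pricing_page_views": (1, datetime(2024, 6, 2)),
            "meetings_held": (1, datetime(2024, 6, 5)),  # counts the outcome itself: leak
        },
    },
]
print(leaky_features(rows))  # {'opportunity_stage': 1, 'meetings_held': 1}
```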

2) Bad labels and inconsistent definitions

If “SQL” means different things across segments or managers, your model learns inconsistencies. This is especially common when:

- Reps mark leads as qualified to hit targets.

- Marketing and sales disagree on stage meaning.

- Sales stages are updated in batches at end of week.

A model cannot restore meaning that the organization has not agreed on.

3) Selection bias (training on a filtered reality)

Sales teams work some leads and ignore others. If you train only on worked leads, the model learns the bias of past routing, not true market response.

Mitigations include:

- Logging “unworked but eligible” leads.

- Running controlled experiments in routing.

- Using exploration (carefully) to collect data in underrepresented segments.
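The sketch below shows one way to combine the first and third mitigations, assuming a simple threshold routing policy: every eligible lead is logged, leads above the threshold are always worked, and leads below it are worked with a small exploration probability `epsilon`. Because each log row records the probability that the lead was worked (its propensity), you can later reweight outcomes with inverse propensity weighting to estimate how all eligible leads would have responded. Threshold, epsilon, and field names are illustrative assumptions.

```python
import random
from dataclasses import dataclass


@dataclass
class RoutingLog:
    lead_id: str
    score: float
    worked: bool
    propensity: float  # probability that the policy would work this lead


def route(leads, threshold=0.6, epsilon=0.05, rng=None):
    """Work every lead at or above `threshold`; below it, work a lead with probability `epsilon`.

    Every eligible lead is logged, worked or not, together with its propensity.
    """
    rng = rng or random.Random()
    logs = []
    for lead_id, score in leads:
        propensity = 1.0 if score >= threshold else epsilon
        logs.append(RoutingLog(lead_id, score, rng.random() < propensity, propensity))
    return logs


def ipw_conversion_rate(logs, outcomes):
    """Inverse-propensity-weighted estimate of the conversion rate if every eligible lead were worked.

    outcomes: {lead_id: converted} for worked leads only.
    """
    weighted = sum(outcomes[log.lead_id] / log.propensity for log in logs if log.worked)
    return weighted / len(logs)


leads = [("a", 0.9), ("b", 0.7), ("c", 0.2), ("d", 0.1)]
logs = route(leads, epsilon=0.5, rng=random.Random(7))
print([(log.lead_id, log.worked, log.propensity) for log in logs])
```

The estimate is only unbiased if every eligible lead has a non-zero chance of being worked, which is why `epsilon` must stay above zero; with a small `epsilon` it is also noisy, so it needs enough volume.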

4) Activity gaming and proxy features

If your best predictors are “emails sent” or “calls made,” you may be predicting rep behavior, not buyer behavior. Teams then game the model by increasing activity, and quality drops.

Prefer features tied to buyer response (reply, meeting acceptance, intent signals) and pair activity with outcome metrics.

5) Segment mismatch (one model for many motions)

Outbound SMB and inbound enterprise do not behave the same. A single global model often underperforms and erodes trust.

Better patterns:

- Segment-specific models (by region, motion, ICP tier).

- One global model plus segment calibration.

6) Overfitting to a quarter that will never happen again

Promotions, pricing changes, product launches, and macro shifts all change behavior. A model trained on last quarter might be “accurate” but fragile.

This is why you need drift monitoring and a retraining plan, not just an initial build.
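One common drift check is the Population Stability Index (PSI), which compares the distribution of a score or feature in recent data against the training sample. A minimal sketch, assuming scores in a plain list and ten quantile bins taken from the training sample:

```python
import bisect
import math


def psi(expected, actual, bins=10, eps=1e-6):
    """Population Stability Index of `actual` against a reference sample `expected`.

    Bin edges are the reference sample's quantiles; eps avoids log(0) for empty bins.
    """
    ref = sorted(expected)
    cuts = [ref[int(len(ref) * i / bins)] for i in range(1, bins)]

    def shares(values):
        counts = [0] * bins
        for v in values:
            counts[bisect.bisect_right(cuts, v)] += 1
        return [max(c / len(values), eps) for c in counts]

    e, a = shares(expected), shares(actual)
    return sum((ai - ei) * math.log(ai / ei) for ei, ai in zip(e, a))


training_scores = [i / 100 for i in range(100)]
recent_scores = [min(0.99, i / 100 + 0.3) for i in range(100)]  # shifted upward
print(psi(training_scores, training_scores))  # 0.0
print(psi(training_scores, recent_scores))
```

A commonly quoted rule of thumb treats PSI below 0.1 as stable, 0.1 to 0.25 as moderate shift, and above 0.25 as large shift, but the alert threshold should be tuned to your volumes. PSI only detects input drift, not a change in how inputs relate to outcomes, so it complements rather than replaces tracking performance by score band.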

7) Lack of calibration (scores people misinterpret)

If a “90” score means 30 percent likelihood in one segment and 70 percent in another, the number becomes political. Calibration by segment matters because sales teams operationalize thresholds.
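A per-segment reliability table makes this visible: bin the scores, then compare the mean predicted probability with the observed conversion rate in each bin, separately for each segment. A minimal sketch with invented data that reproduces the example above (a 0.9 score converting at 30 percent in one segment and 70 percent in another):

```python
from collections import defaultdict


def calibration_by_segment(rows, bins=5):
    """Reliability table per segment.

    rows: iterable of (segment, score in [0, 1], converted).
    Returns {segment: [(bin_index, mean_score, observed_rate, n), ...]}.
    """
    groups = defaultdict(lambda: defaultdict(list))
    for segment, score, converted in rows:
        b = min(int(score * bins), bins - 1)  # a score of 1.0 falls into the top bin
        groups[segment][b].append((score, converted))
    report = {}
    for segment, by_bin in groups.items():
        report[segment] = [
            (b, sum(s for s, _ in pts) / len(pts), sum(y for _, y in pts) / len(pts), len(pts))
            for b, pts in sorted(by_bin.items())
        ]
    return report


def worst_gap(report):
    """Largest |mean score - observed rate| across bins, per segment."""
    return {seg: max(abs(m - o) for _, m, o, _ in table) for seg, table in report.items()}


rows = [("smb", 0.9, i < 3) for i in range(10)] + [("enterprise", 0.9, i < 7) for i in range(10)]
report = calibration_by_segment(rows)
print(report)
print(worst_gap(report))
```

If the gaps are large, per-segment recalibration (for example Platt scaling or isotonic regression fitted on each segment's holdout data) is often cheaper than training separate models.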

8) No path from score to action

A score without a decision rule becomes shelfware. Define:

- What happens at each score band (work now, nurture, route, disqualify).

- SLAs (how fast to act).

- Ownership (SDR, AE, marketing, partner).
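Writing the decision rule down as data makes it reviewable and versionable alongside the model. A minimal sketch; the thresholds, SLAs, action names, and owners below are hypothetical placeholders, not recommendations:

```python
from dataclasses import dataclass
from datetime import timedelta
from typing import Optional


@dataclass(frozen=True)
class Band:
    min_score: float
    action: str
    sla: Optional[timedelta]  # None = no response-time commitment
    owner: str


# Hypothetical thresholds, SLAs, and owners; set these from your own calibration and capacity.
BANDS = [
    Band(0.70, "work_now", timedelta(hours=1), "SDR"),
    Band(0.40, "route", timedelta(days=1), "AE"),
    Band(0.15, "nurture", timedelta(days=7), "marketing"),
    Band(0.00, "disqualify_review", None, "marketing"),
]


def decide(score: float) -> Band:
    """Return the first band (highest threshold first) whose minimum the score meets."""
    for band in BANDS:
        if score >= band.min_score:
            return band
    raise ValueError(f"score out of range: {score}")


print(decide(0.82))
```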

9) “Set and forget” deployment

Predictive systems are products. They need:

- Monitoring (data health, drift, performance by band)

- Change control (feature changes, prompt versions if you use LLM extraction)

- Feedback loops (why false positives and false negatives happened)

NIST’s AI Risk Management Framework is a useful reference for structuring governance without turning it into bureaucracy.

10) Privacy, platform policies, and trust

Sales data includes personal data. Predictive systems must respect consent, retention, and platform terms. For US teams, understand your obligations under state privacy laws such as the California Consumer Privacy Act (CCPA). If you process personal data of people in the EU, GDPR may apply.

Also consider internal trust: if the model is a black box that cannot explain “why,” reps will ignore it after a few bad calls.

A practical implementation approach (that avoids the common traps)

If you are building or buying predictive sales AI, use a narrow, operational pilot.

Step 1: Pick one prediction that maps to one workflow

Good starting points:

- “Meeting booked within 14 days” for outbound

- “SQL within 7 days” for inbound speed-to-lead

- “Opportunity will slip” for late-stage deals

Step 2: Write the label definition in one paragraph

Include:

- Inclusion and exclusion criteria

- The exact time window

- What counts as success

- What counts as “no” (including disqualifications)
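The same definition can be written as a labeling function so that training data and reporting use exactly one rule. A minimal sketch for "meeting booked within 14 days" of first touch, assuming hypothetical per-lead timestamps. Leads whose 14-day window has not closed yet are excluded instead of being counted as "no", which would otherwise bias recent data toward failure.

```python
from datetime import datetime, timedelta
from typing import Optional

WINDOW = timedelta(days=14)


def meeting_label(
    first_touch: Optional[datetime],
    meeting_booked: Optional[datetime],
    disqualified: Optional[datetime],
    is_existing_customer: bool,
    data_cutoff: datetime,
) -> Optional[int]:
    """1 = meeting booked within 14 days of first touch, 0 = no, None = excluded."""
    # Exclusion criteria: existing customers and leads that were never contacted.
    if is_existing_customer or first_touch is None:
        return None
    deadline = first_touch + WINDOW
    if meeting_booked is not None and first_touch <= meeting_booked <= deadline:
        return 1
    # A disqualification inside the window counts as a "no".
    if disqualified is not None and disqualified <= deadline:
        return 0
    # Window still open at the data cutoff: the outcome is unknown, so do not label it.
    if data_cutoff < deadline:
        return None
    return 0


cutoff = datetime(2024, 6, 1)
t0 = datetime(2024, 5, 1)
print(meeting_label(t0, datetime(2024, 5, 10), None, False, cutoff))  # 1
print(meeting_label(datetime(2024, 5, 25), None, None, False, cutoff))  # None (window still open)
```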

Step 3: Start with a baseline model you can explain

Often that is logistic regression or gradient-boosted trees, with simple recency and evidence features. If you cannot explain the model drivers, you will struggle to operationalize it.
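To show what explaining the drivers looks like, here is a dependency-free logistic regression trained with batch gradient descent on a toy dataset. The feature names and data are invented; in practice you would standardize features, add regularization (scikit-learn's `LogisticRegression`, for example, applies L2 by default), and evaluate on held-out data.

```python
import math


def sigmoid(z):
    # Numerically stable logistic function.
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    e = math.exp(z)
    return e / (1.0 + e)


def train_logistic(X, y, lr=0.1, epochs=2000):
    """Plain batch gradient descent on log loss; returns (weights, bias)."""
    n, d = len(X), len(X[0])
    w, b = [0.0] * d, 0.0
    for _ in range(epochs):
        grad_w, grad_b = [0.0] * d, 0.0
        for xi, yi in zip(X, y):
            err = sigmoid(b + sum(wj * xj for wj, xj in zip(w, xi))) - yi
            for j in range(d):
                grad_w[j] += err * xi[j]
            grad_b += err
        w = [wj - lr * g / n for wj, g in zip(w, grad_w)]
        b -= lr * grad_b / n
    return w, b


def predict(w, b, x):
    return sigmoid(b + sum(wj * xj for wj, xj in zip(w, x)))


# Hypothetical features: replied (0/1), engaged contacts in account, negative signal (0/1).
FEATURES = ["replied", "engaged_contacts", "negative_signal"]
X = [[1, 2, 0], [1, 3, 0], [0, 1, 0], [0, 0, 1], [1, 1, 1], [0, 0, 0], [1, 2, 0], [0, 1, 1]]
y = [1, 1, 0, 0, 0, 0, 1, 0]

w, b = train_logistic(X, y)
# The signed weights are the "drivers" you can show to reps and managers.
for name, weight in sorted(zip(FEATURES, w), key=lambda p: -abs(p[1])):
    print(f"{name:>18}: {weight:+.2f}")
```

The sign and size of each weight is the explanation you can give reps. Here the negative-signal weight ends up negative because, in this toy data, only non-converting leads carry that signal.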

Step 4: Prove lift with a controlled rollout

Do not roll out to everyone at once. Compare:

- Teams using the score vs teams not using it

- Or weeks with the model vs weeks without
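For a simple comparison of conversion rates between the two groups, a two-proportion z-test is a reasonable first check. A minimal sketch using only the standard library; the counts in the example are invented:

```python
import math


def two_proportion_ztest(conv_a, n_a, conv_b, n_b):
    """Two-sided z-test for a difference in conversion rates.

    Group A uses the score, group B does not. Returns (difference, z, p_value).
    """
    p_a, p_b = conv_a / n_a, conv_b / n_b
    pooled = (conv_a + conv_b) / (n_a + n_b)
    se = math.sqrt(pooled * (1 - pooled) * (1 / n_a + 1 / n_b))
    if se == 0:
        raise ValueError("undefined when both groups have 0% or 100% conversion")
    z = (p_a - p_b) / se
    # Two-sided p-value: 2 * (1 - Phi(|z|)) == erfc(|z| / sqrt(2))
    p_value = math.erfc(abs(z) / math.sqrt(2))
    return p_a - p_b, z, p_value


diff, z, p = two_proportion_ztest(conv_a=120, n_a=1000, conv_b=90, n_b=1000)
print(f"difference={diff:.3f}, z={z:.2f}, p={p:.3f}")
```

This treats each lead as independent. If you assign whole teams or whole weeks to the model, outcomes within a team or week are correlated (same reps, same seasonality), so the unit of analysis should be the team or week, and this lead-level test will overstate confidence.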

Step 5: Add better signals, not just more signals

Conversation evidence is often where the next step-function improvement comes from because it reflects real buyer intent.

If LinkedIn is a core channel for you, improving how conversation outcomes and qualification evidence are captured can materially improve prediction quality. Kakiyo is built around autonomous LinkedIn conversations, lead qualification, scoring, and analytics, which can make those conversation-level signals more consistent and usable across a team.

You can explore how Kakiyo approaches AI-driven conversations and qualification at kakiyo.com.