Qualified Leads: Scoring That Sales Trusts

A practical guide to building lead scoring that sales actually trusts: explainable, outcome-driven, and operationalized with routing, SLAs, calibration, decay, and conversational evidence.

Most scoring systems don’t fail because the math is wrong. They fail because sales doesn’t trust them, so reps ignore the score, cherry-pick leads, or revert to “whoever replied last.” If you want more qualified leads (and fewer calendar fillers), your lead scoring needs to be explainable, consistent, tied to real outcomes, and operationally useful at the moment a rep decides what to do next.

This guide walks through how to build scoring that sales trusts, including the minimum ingredients, the calibration process, and the workflow mechanics that turn a score into pipeline.

What “scoring that sales trusts” actually means

Sales trust is not about having a perfect model. It comes from predictability, transparency, and accountability.

A scoring system earns trust when:

- It matches reality often enough that reps feel it improves their day (better conversations, better meetings).

- It explains itself (the rep can see why this lead is “hot,” not just that it is).

- It is hard to game (nobody can inflate scores with empty clicks or low-signal activity).

- It triggers clear actions (who works it, when, how fast, and what “done” looks like).

- It improves over time with closed-loop feedback (not quarterly debates and finger-pointing).

If you want a clean foundation for definitions first, pair this with Kakiyo’s guides on MQL and SQL alignment and on what an SQL is (with examples).

Step 1: Choose the right “truth” label (or your scoring will drift)

Before you score anything, decide what outcome the score is supposed to predict.

Common options:

- Sales-accepted lead (SAL): a human rep agrees it is worth pursuing.

- Sales-qualified lead (SQL): the lead meets qualification criteria and is ready for an AE.

- Meeting booked: easy to measure, but risky if meetings are low-quality.

- Meeting held and accepted by AE: a stronger quality signal than “booked.”

- Opportunity created / pipeline: best long-term signal, but slower feedback.

For sales-trusted scoring, the sweet spot is usually:

- Primary label: AE-accepted meeting (or SQL that is accepted by sales)

- Secondary labels: meeting held, opportunity created

Why: it balances speed (you can learn within weeks) and quality (sales validates it).

One rule that prevents endless scoring arguments

If sales won’t commit to a definition, they won’t commit to the score.

Write your definition down in one paragraph and add 3 to 5 examples and non-examples. That single page becomes the anchor for scoring, routing, and dispute resolution.

Step 2: Use a scoring structure sales can understand in 10 seconds

The fastest way to lose trust is a score that feels like a black box. The fastest way to earn it is a score built on pillars reps already believe in.

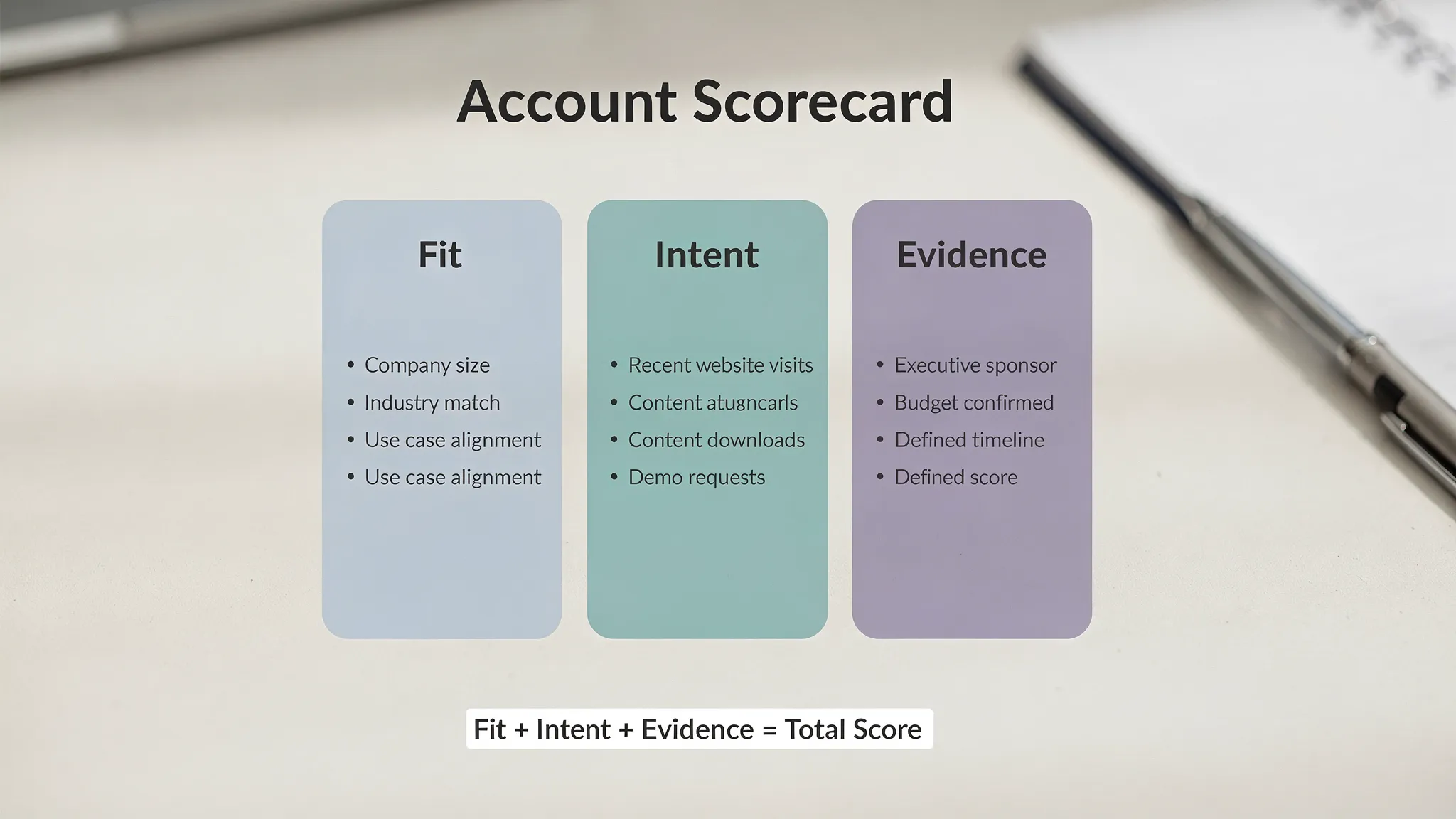

A practical structure that stays explainable is:

- Fit: are they the right kind of account/person?

- Intent: are they showing buying interest or urgency?

- Evidence: do we have proof inside the conversation that it is real?

“Evidence” is the trust lever most teams miss. Fit and intent can be inferred, but evidence is what prevents false positives.
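As a minimal sketch, assuming each signal is tagged with exactly one pillar, the structure can be kept as a plain lookup table so reps can see which pillar every point came from. The signal names and point values below are hypothetical placeholders, not recommended weights.

```python
# Hypothetical signal catalog: signal name -> (pillar, points).
# Names and point values are placeholders; derive real weights from calibration.
SIGNALS = {
    "icp_industry": ("fit", 15),
    "target_company_size": ("fit", 10),
    "buyer_title": ("fit", 15),
    "pricing_page_view": ("intent", 10),
    "demo_page_view": ("intent", 10),
    "answered_qualifying_question": ("evidence", 20),
    "confirmed_timeframe": ("evidence", 20),
}


def pillar_scores(observed: set) -> dict:
    """Sum points per pillar for the signals observed on one lead."""
    totals = {"fit": 0, "intent": 0, "evidence": 0}
    for name in observed:
        if name in SIGNALS:  # unknown signals are ignored rather than guessed
            pillar, points = SIGNALS[name]
            totals[pillar] += points
    return totals


lead = {"icp_industry", "buyer_title", "pricing_page_view", "confirmed_timeframe"}
print(pillar_scores(lead))  # {'fit': 30, 'intent': 10, 'evidence': 20}
```

Keeping the three subtotals separate, instead of collapsing them into one number right away, is what lets a rep see that a lead is strong on fit but thin on evidence.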

Here’s a simple comparison of signals and why sales tends to trust (or distrust) them.

| Signal type | Examples | Sales trust level | Why it helps or hurts trust |
|---|---|---|---|
| Firmographic fit | ICP industry, company size, geo, tech stack | High | Stable, hard to fake, aligns to territory strategy |
| Role fit | Title, function, seniority | High | Reps know who buys and who blocks |
| Behavioral intent (low evidence) | Page views, email opens, ad clicks | Medium to low | Often noisy, easy to game, varies by channel |
| Behavioral intent (high evidence) | Pricing page view + demo page view in same week | Medium to high | Better when bundled and time-bound |
| Conversational evidence | Answered qualifying question, confirmed timeframe, asked for next step | Very high | Direct buyer language, context-rich, hard to misinterpret |

If your motion is LinkedIn-first, conversational evidence matters even more because it captures intent that never hits your website analytics.

Step 3: Make the score explainable, not just visible

Putting a numeric score in a CRM field is not “explainable.” Sales trust comes from seeing why the score is high.

A sales-trusted score should ship with:

- Top drivers (3 max): the main reasons this lead is scored the way it is.

- Recency context: “Intent spike in last 7 days” beats “Intent: 82.”

- Conversation proof: a snippet, summary, or checked criteria from the actual thread.

- Clear next action: book, qualify deeper, nurture, or disqualify.
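One way to produce the top drivers is to record how many points each rule contributed and surface the largest ones, penalties included. This sketch assumes your scoring already yields a per-reason contribution; the function name and the reason labels are illustrative.

```python
def top_drivers(contributions: dict, k: int = 3) -> list:
    """Return up to k readable reasons, ordered by absolute impact on the score."""
    # Drop rules that fired but contributed nothing.
    nonzero = [(reason, pts) for reason, pts in contributions.items() if pts != 0]
    # Negative drivers matter too: a large penalty should be explained, not hidden.
    ranked = sorted(nonzero, key=lambda item: abs(item[1]), reverse=True)
    return [f"{reason} ({pts:+g})" for reason, pts in ranked[:k]]


contributions = {
    "Confirmed timeframe: this quarter": 20,
    "Title: VP Sales": 15,
    "Pricing page view in last 7 days": 10,
    "Email open": 1,
    "Outside ICP region": -25,
}
for line in top_drivers(contributions):
    print(line)
```

Ranking by absolute value means a lead that is scored down also gets an explanation, which is often the case reps question most.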

The most practical format: score bands with reasons

Instead of obsessing over 0 to 100 precision, create bands that map to decisions.

| Score band | Meaning | SLA | Next action | What must be captured |
|---|---|---|---|---|
| A (Priority) | Likely qualified | Work today | Move to meeting path | Evidence of need + timeframe (or your chosen criteria) |
| B (Active) | Promising but incomplete | Work within 48 hours | Ask 1 to 2 qualifying questions | Missing one key criterion |
| C (Nurture) | Not ready | Weekly / sequence | Provide value, monitor triggers | Clear reason for nurture |
| D (Disqualify) | Wrong fit or explicit no | Close out | Disqualify, route elsewhere | Disqual reason selected |
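The band table can be encoded as a small decision function. This sketch assumes a 0 to 100 score, treats need and timeframe as the A-band evidence criteria from the table, and uses thresholds of 70 and 40 purely as hypothetical starting points; the `Lead` class is illustrative.

```python
from dataclasses import dataclass

# Hypothetical starting thresholds on a 0-100 score; tune them during calibration.
A_THRESHOLD = 70
B_THRESHOLD = 40


@dataclass
class Lead:
    score: int                   # total from your scoring rules, 0-100
    need_confirmed: bool         # evidence captured in the conversation
    timeframe_confirmed: bool    # evidence captured in the conversation
    disqualified: bool = False   # wrong fit or explicit "no"


def assign_band(lead: Lead) -> str:
    if lead.disqualified:
        return "D"  # close out with a disqual reason
    evidence_complete = lead.need_confirmed and lead.timeframe_confirmed
    if lead.score >= A_THRESHOLD and evidence_complete:
        return "A"  # work today, move to the meeting path
    if lead.score >= B_THRESHOLD:
        return "B"  # promising; ask 1 to 2 qualifying questions
    return "C"      # nurture until a trigger fires


print(assign_band(Lead(score=85, need_confirmed=True, timeframe_confirmed=False)))  # B
```

The design choice worth noting: a high score alone never produces an A. Missing evidence caps the lead at B, which keeps the A band precise.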

This is where scoring becomes something reps can run in the real world.

Step 4: Calibrate for precision first, then expand coverage

Most teams try to score every lead and then wonder why trust collapses. Start by being right on a smaller set.

In scoring terms, early trust is usually built by optimizing for:

- Precision: when you say it is qualified, it usually is.

Later, once adoption is strong, you can improve:

- Recall: catching more of the leads that would have qualified.

If you want a clean explanation of precision vs recall, see the scikit-learn documentation.
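For the A band, precision and recall come down to counting. This minimal sketch assumes two parallel lists: whether each lead was scored A, and whether it reached your truth label (for example, AE-accepted meeting). If you already use scikit-learn, `precision_score(y_true, y_pred)` and `recall_score(y_true, y_pred)` compute the same quantities.

```python
def precision_recall(predicted_a: list, qualified: list) -> tuple:
    """Precision and recall of the A band against a truth label."""
    if len(predicted_a) != len(qualified):
        raise ValueError("both lists must describe the same leads")
    pairs = list(zip(predicted_a, qualified))
    tp = sum(1 for p, q in pairs if p and q)        # scored A and qualified
    fp = sum(1 for p, q in pairs if p and not q)    # scored A, not qualified
    fn = sum(1 for p, q in pairs if q and not p)    # qualified, but not scored A
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    return precision, recall


predicted_a = [True, True, True, True, False, False, False, False]
qualified = [True, True, True, False, True, True, False, False]
print(precision_recall(predicted_a, qualified))  # (0.75, 0.6)
```

In this illustrative input, 3 of the 4 leads scored A were qualified (precision 0.75), while 2 qualified leads were scored below A (recall 0.6). That is the early profile described above: be right when you say A, then widen coverage.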

A practical calibration workflow (that sales will respect)

Run a 2 to 4 week calibration sprint:

- Pull the last 60 to 200 leads that reached the outcome you care about (for example, AE-accepted meeting).

- Compare them to a similar number of leads that did not.

- Identify which signals truly separate the two groups.

- Set conservative thresholds for your A band.

- Put B and C bands behind clear next actions.
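The "identify which signals truly separate the two groups" step can start as a simple rate comparison. This sketch assumes each lead is represented as a set of hypothetical signal names; it ranks signals by how much more often they appear among leads that reached the outcome. With 60 to 200 leads per group, small gaps are likely noise, so treat the output as a shortlist to review with sales, not a statistical test.

```python
def separating_signals(positives: list, negatives: list) -> list:
    """Rank signals by how much more often they appear in positive leads.

    positives / negatives: lists of sets of signal names, one set per lead.
    Returns (signal, positive_rate, negative_rate, gap) tuples, largest gap first.
    """
    if not positives or not negatives:
        raise ValueError("need at least one lead in each group")
    signals = set().union(*positives, *negatives)
    rows = []
    for signal in signals:
        pos_rate = sum(signal in lead for lead in positives) / len(positives)
        neg_rate = sum(signal in lead for lead in negatives) / len(negatives)
        rows.append((signal, pos_rate, neg_rate, pos_rate - neg_rate))
    # Sort by gap (descending), then by name so ties are deterministic.
    return sorted(rows, key=lambda row: (-row[3], row[0]))


# Illustrative inputs only: leads that reached the outcome vs. leads that did not.
positives = [
    {"confirmed_timeframe", "pricing_page_view"},
    {"confirmed_timeframe", "email_open"},
    {"confirmed_timeframe", "pricing_page_view", "email_open"},
    {"pricing_page_view"},
]
negatives = [{"email_open"}, {"email_open", "pricing_page_view"}, {"email_open"}, set()]

for signal, pos_rate, neg_rate, gap in separating_signals(positives, negatives):
    print(f"{signal}: {pos_rate:.0%} vs {neg_rate:.0%} (gap {gap:+.2f})")
```

Here the conversational signal separates the groups best, and email opens are more common among leads that did not convert, which is the kind of finding that justifies a conservative A threshold.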

Then do the part most teams skip: show sales the error analysis.

- 5 false positives: why were they wrong, what signal tricked us?

- 5 false negatives: what did we miss, what evidence existed but was not captured?

When reps see you treating scoring like a product (with iteration and postmortems), trust rises fast.

Step 5: Build in negative scoring and decay (so the system feels honest)

Sales distrusts scores that only go up. Real buyers go cold, go silent, or say “not this year.” Your scoring must reflect that.

Two mechanisms fix this:

Negative scoring

Examples:

- Student, recruiter, vendor, competitor

- Explicit “no budget,” “no project,” “we just renewed,” “stop messaging me”

- Wrong region, wrong segment, outside ICP

Time decay

Intent without recency is a trap. You can keep this simple:

- Reduce intent points after 7 to 14 days without activity.

- Heavily reduce after 30 days.
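Both mechanisms fit in a few lines. This sketch uses a 14-day first cutoff (within the 7 to 14 day range above), 50% and 90% reductions, and a small penalty table; every cutoff, multiplier, signal name, and point value here is a hypothetical choice to replace with your own.

```python
from datetime import date

# Hypothetical cutoffs: first reduction after 14 idle days, heavy after 30.
REDUCE_AFTER_DAYS = 14
HEAVY_AFTER_DAYS = 30

# Hypothetical penalties for negative signals; names and values are placeholders.
NEGATIVE_POINTS = {
    "competitor": -50,
    "student_or_recruiter": -40,
    "explicit_no_budget": -30,
    "outside_icp_region": -25,
}


def decayed_intent(intent_points: float, last_activity: date, today: date) -> float:
    """Shrink intent points the longer the lead has been quiet."""
    idle_days = (today - last_activity).days
    if idle_days <= REDUCE_AFTER_DAYS:
        return intent_points
    if idle_days <= HEAVY_AFTER_DAYS:
        return intent_points * 0.5   # moderate decay
    return intent_points * 0.1       # heavy decay after a month of silence


def total_score(fit: float, intent_points: float, last_activity: date,
                today: date, negatives: set) -> float:
    """Fit is left as-is, intent decays, negative signals subtract; floor at zero."""
    score = fit + decayed_intent(intent_points, last_activity, today)
    score += sum(NEGATIVE_POINTS.get(signal, 0) for signal in negatives)
    return max(score, 0)


print(total_score(30, 40, date(2024, 1, 1), date(2024, 1, 25), set()))  # 50.0
```

Only intent decays in this sketch, on the assumption that fit attributes such as company size change far more slowly than buying interest.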

The goal is not mathematical perfection. It is to make the score behave the way a great rep would behave.

Step 6: Operationalize the score with routing, SLAs, and a handoff packet

Scoring earns trust when it changes outcomes. That requires workflow.

At minimum, operationalize:

Routing rules

- A band: route to the right owner immediately (by segment, territory, or account).

- B band: route to SDR queue with a clear “complete qualification” task.

- C band: route to nurture (marketing or SDR sequence), keep it measurable.

- D band: close out with reason.

SLAs

Speed still matters, but it must be tied to quality:

- A: same-day response

- B: within 48 hours

- C: no SLA, but weekly touches or trigger-based reactivation
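The routing rules and SLAs above can live in one function so that every band has exactly one destination and one deadline. In this minimal sketch the queue names are hypothetical, and "same day" is interpreted as the end of the calendar day in whatever timezone `created_at` uses; business hours, holidays, and round-robin assignment are deliberately left out.

```python
from datetime import datetime, timedelta
from typing import Optional, Tuple


def route(band: str, created_at: datetime) -> Tuple[str, Optional[datetime]]:
    """Return (hypothetical queue name, response deadline or None if no SLA)."""
    if band == "A":
        # "Same day": due by the end of the calendar day the lead arrived.
        deadline = created_at.replace(hour=23, minute=59, second=59, microsecond=0)
        return "territory_owner", deadline
    if band == "B":
        return "sdr_qualification_queue", created_at + timedelta(hours=48)
    if band == "C":
        return "nurture_sequence", None  # weekly touches or trigger-based reactivation
    if band == "D":
        return "closed_with_reason", None
    raise ValueError(f"unknown band: {band!r}")


print(route("B", datetime(2024, 5, 6, 10, 30)))
```

A lead that arrives late in the evening gets very little time under a literal same-day rule, so you may prefer a business-hours deadline instead.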

The handoff packet (what sales actually needs)

A sales-trusted handoff is short:

- Why now (trigger or intent)

- Why us (value hypothesis)

- What we learned (1 to 3 facts)

- What to do next (recommended CTA)
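Because the packet is short, it can be enforced as a structured record rather than free text. This sketch assumes the four fields above and rejects packets with fewer than one or more than three facts; the class, field names, and example values are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class HandoffPacket:
    why_now: str      # trigger or intent
    why_us: str       # value hypothesis
    facts: list       # 1 to 3 things learned in the conversation
    next_action: str  # recommended CTA

    def __post_init__(self):
        if not 1 <= len(self.facts) <= 3:
            raise ValueError("a handoff should carry 1 to 3 facts")
        for name in ("why_now", "why_us", "next_action"):
            if not getattr(self, name).strip():
                raise ValueError(f"{name} is required")

    def render(self) -> str:
        """Plain-text summary a rep can read in a few seconds."""
        lines = [f"Why now: {self.why_now}", f"Why us: {self.why_us}", "What we learned:"]
        lines += [f"- {fact}" for fact in self.facts]
        lines.append(f"Next step: {self.next_action}")
        return "\n".join(lines)


packet = HandoffPacket(
    why_now="Viewed pricing and asked about implementation this week",
    why_us="Reduces manual lead triage for a growing SDR team",
    facts=["Evaluating tools this quarter", "Sales leader is involved"],
    next_action="Book a discovery call",
)
print(packet.render())
```

Rejecting incomplete packets at creation time keeps the "short and complete" standard from eroding as volume grows.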

If you want an end-to-end process view, Kakiyo’s lead qualification process guide is a helpful companion; this article focuses specifically on making scoring believable and adopted.

Step 7: Use conversation signals to upgrade “intent” into “evidence”

For outbound and LinkedIn-first motions, the highest-trust signals often live inside the thread:

- Did they answer a qualifying question?

- Did they confirm a problem worth solving?

- Did they indicate timing (this quarter vs someday)?

- Did they ask about pricing, implementation, alternatives, or stakeholders?

This is where conversational systems outperform form fills and generic engagement scoring.

How Kakiyo fits (without pretending the tool is your strategy)

Kakiyo is designed for this exact gap: converting LinkedIn conversations into qualified leads with proof, not just activity.

Based on Kakiyo’s product capabilities, teams use it to:

- Run autonomous LinkedIn conversations that stay personalized at scale

- Apply an intelligent scoring system during the conversation, not weeks later

- Use industry-specific templates, custom prompts, and A/B prompt testing to improve qualification quality

- Maintain control with conversation override and review outcomes in a centralized real-time dashboard with analytics and reporting

In other words, instead of scoring based only on passive clicks, you can score based on what the buyer actually said, and route accordingly.

Step 8: Protect trust with governance (the part RevOps cannot skip)

Even a good score will lose credibility if the inputs are messy or the system is easy to manipulate.

Governance checklist:

- Field hygiene: standardize lifecycle stages and disqual reasons.

- Deduplication: one buyer, one record, one score.

- Outcome integrity: if the label is “qualified,” ensure it is consistently applied.

- Change control: version your scoring rules and document changes.

- Paired metrics: track volume and quality together (for example, meetings booked and AE acceptance).

If your team uses AI anywhere in the process, include guardrails: who can change prompts, who approves new templates, and how you monitor unintended shifts.
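The "one buyer, one record, one score" rule needs a matching key and a survivorship policy. This minimal sketch assumes a trimmed, lower-cased email identifies a buyer and keeps the most recently updated record; LinkedIn-first teams might key on a profile URL instead, real CRMs often need fuzzier matching, and merging signals across duplicates is an equally valid policy. The function names and records are illustrative.

```python
from datetime import datetime


def buyer_key(record: dict) -> str:
    """Hypothetical identity key: a trimmed, lower-cased email address."""
    return record["email"].strip().lower()


def dedupe(records: list) -> list:
    """Keep one record per buyer: the most recently updated one."""
    latest = {}
    for record in records:
        key = buyer_key(record)
        current = latest.get(key)
        if current is None or record["updated_at"] > current["updated_at"]:
            latest[key] = record
    return list(latest.values())


records = [
    {"email": "Ana@Example.com ", "score": 62, "updated_at": datetime(2024, 5, 1)},
    {"email": "ana@example.com", "score": 81, "updated_at": datetime(2024, 5, 9)},
    {"email": "li@example.org", "score": 40, "updated_at": datetime(2024, 5, 3)},
]
for record in dedupe(records):
    print(buyer_key(record), record["score"])
```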

Frequently Asked Questions

What makes lead scoring “sales-trusted” versus “marketing-owned”? Sales-trusted scoring is built on outcomes sales agrees with (like AE-accepted meetings), includes clear evidence and reasons for the score, and changes rep workflow through routing and SLAs. Marketing can still own operations, but sales must co-own definitions and feedback.

Which metrics prove the scoring is working? Start with AE acceptance rate by score band, meeting held rate by band, and meeting-to-opportunity conversion by band. If you have enough volume, also track precision and recall for your A band.
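These band-level rates are simple to compute from a meeting log. A minimal sketch, assuming one record per booked meeting with hypothetical boolean fields `ae_accepted`, `held`, and `opportunity`; meeting-to-opportunity conversion is computed over held meetings only, and the records are made up for illustration.

```python
from collections import defaultdict


def rates_by_band(meetings: list) -> dict:
    """Per-band AE acceptance, meeting-held rate, and meeting-to-opportunity conversion."""
    counts = defaultdict(lambda: {"booked": 0, "accepted": 0, "held": 0, "opps": 0})
    for m in meetings:
        c = counts[m["band"]]
        c["booked"] += 1
        c["accepted"] += m["ae_accepted"]            # True counts as 1, False as 0
        c["held"] += m["held"]
        c["opps"] += m["held"] and m["opportunity"]  # only opps from held meetings
    report = {}
    for band in sorted(counts):
        c = counts[band]
        report[band] = {
            "ae_acceptance": c["accepted"] / c["booked"],
            "held_rate": c["held"] / c["booked"],
            # None when no meetings were held yet, to avoid dividing by zero
            "meeting_to_opp": c["opps"] / c["held"] if c["held"] else None,
        }
    return report


meetings = [
    {"band": "A", "ae_accepted": True, "held": True, "opportunity": True},
    {"band": "A", "ae_accepted": True, "held": True, "opportunity": False},
    {"band": "A", "ae_accepted": True, "held": True, "opportunity": True},
    {"band": "A", "ae_accepted": False, "held": False, "opportunity": False},
    {"band": "B", "ae_accepted": True, "held": True, "opportunity": False},
    {"band": "B", "ae_accepted": False, "held": True, "opportunity": False},
]
for band, rates in rates_by_band(meetings).items():
    print(band, rates)
```

Reporting the three rates side by side per band is one way to keep volume and quality paired, as the governance checklist recommends.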

How often should we recalibrate lead scoring? Recalibrate lightly monthly (threshold tuning and driver review) and do a deeper review quarterly, or any time your ICP, messaging, or channels shift materially.

Should we optimize for more qualified leads or higher lead quality first? Quality first. Early trust is built by being right on fewer leads (higher precision). Once sales adopts the score, expand coverage to capture more qualified leads without flooding reps.

Can AI lead scoring be trusted? Yes, if it is explainable and audited. AI can help summarize conversations, identify intent, and apply consistent qualification, but trust still requires visibility into the drivers, clean outcome labels, and human governance.

Turn scoring into qualified meetings with conversation-led proof

If your scoring system is generating debate instead of pipeline, the fix is usually not another dashboard. It is capturing better evidence, faster, in the channel where real intent shows up.

Kakiyo helps teams generate more qualified leads by managing personalized LinkedIn conversations from first touch to qualification to meeting booking, with scoring, testing, and human override built in.

Explore Kakiyo at kakiyo.com and see how conversation-led scoring can make lead quality obvious, not arguable.