AI Sales Forecasting: Methods, Models, and Accuracy

Practical guide to building explainable, timely, and defensible AI-driven sales forecasts — covering the right questions, data schema, methods, accuracy metrics, and how LinkedIn conversation signals improve pipeline predictability.

Revenue leaders do not just need a number; they need a forecast that is explainable, timely, and defensible in the boardroom. AI sales forecasting can deliver that if it is grounded in the right questions, data, models, and accuracy practices. This guide breaks down practical methods, how to choose among them, ways to measure accuracy, and how conversation-level signals from LinkedIn can help you get ahead of pipeline swings.

Start with the forecast questions, not the model

Great forecasts begin with scope. Define the specific business question and horizon before you pick a method.

- In-quarter commit: Which opportunities will close this quarter and for how much, with what confidence range?

- Next-quarter outlook: What bookings should we expect by segment, region, or product?

- Annual plan tracking: Are we pacing to plan, and how should we reallocate capacity?

- Coverage and risk: Do we have enough pipeline and where are the gaps by stage and source?

Each question implies different data granularity and modeling choices. In-quarter commit is usually modeled at the opportunity level, while next-quarter outlook and plan tracking often use aggregated time series at the segment level.

The data to make AI sales forecasting work

You do not need a data lake to get started, but you do need consistent fields and clear definitions. Aim for a minimal, well-defined schema, then enrich it with leading indicators.

| Field | Level | Why it matters |
|---|---|---|
| Opportunity ID, account, owner | Opportunity | Deduplication and attribution |
| Amount, product, region, segment | Opportunity | Forecasting drivers and hierarchy |
| Stage, stage age, created date | Opportunity | Win propensity and slippage risk |
| Expected close date, last activity date | Opportunity | Recency and time to close |
| Source, campaign, channel | Opportunity | Mix shifts and seasonality |
| Meetings held, next meeting date | Opportunity | Momentum signals |
| Win or loss, close date | Opportunity | Labels for supervised learning |
| Bookings by week or month | Aggregate | Time series baseline |
| Rep capacity, ramp, quota | Team | Coverage models and plan checks |
| LinkedIn conversation signals | Lead or contact | Early intent and velocity |

If your team runs LinkedIn at scale, capture leading indicators such as acceptance rate, reply rate, qualified conversation rate, and meetings booked. If you use Kakiyo, its intelligent scoring system and advanced analytics can provide conversation-level signals that improve opportunity quality features without manual logging.
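Recency features such as stage age and days since last activity should be computed as of a snapshot date, using only what was known on that date; otherwise future information leaks into training. A minimal sketch, assuming the record is already a point-in-time snapshot and using hypothetical field names (`stage_entered`, `last_activity`, `next_meeting`) that you would map to your own CRM export:

```python
from dataclasses import dataclass
from datetime import date
from typing import Optional


@dataclass
class Opportunity:
    # Hypothetical record covering a few fields from the schema table above
    opp_id: str
    amount: float
    stage: str
    stage_entered: date          # assumption: date the current stage began
    created: date
    expected_close: date
    last_activity: date
    next_meeting: Optional[date] = None


def recency_features(opp: Opportunity, as_of: date) -> dict:
    """Derive recency and momentum features as of a snapshot date."""
    return {
        "stage_age_days": (as_of - opp.stage_entered).days,
        "opp_age_days": (as_of - opp.created).days,
        "days_since_last_activity": (as_of - opp.last_activity).days,
        "days_to_expected_close": (opp.expected_close - as_of).days,
        # 1 if a future meeting is already on the calendar at snapshot time
        "has_next_meeting": int(opp.next_meeting is not None and opp.next_meeting >= as_of),
    }


opp = Opportunity("OPP-1", 50_000.0, "Proposal", stage_entered=date(2024, 5, 1),
                  created=date(2024, 3, 1), expected_close=date(2024, 6, 28),
                  last_activity=date(2024, 5, 20), next_meeting=date(2024, 6, 3))
features = recency_features(opp, as_of=date(2024, 5, 31))
# stage_age_days=30, days_since_last_activity=11, days_to_expected_close=28, has_next_meeting=1
```

Generating one row per opportunity per weekly snapshot, rather than one row per opportunity, is what lets the same deal contribute training examples at different ages.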

Methods and models for AI sales forecasting

No single model wins everywhere. Choose the method that matches your question, your data volume, and the level at which you need to explain the forecast.

| Method | Input granularity | Strengths | Watchouts | Best for |
|---|---|---|---|---|
| Probability-weighted pipeline (rules-based) | Opportunity | Transparent, quick to operationalize | Stage probabilities often biased, poor on timing | First baseline and rep coaching |
| Logistic regression or gradient-boosted classification for win by date | Opportunity | Learns from patterns, good reason codes with SHAP | Needs clean labels and handling for class imbalance | In-quarter commit and next 60- to 90-day close prediction |
| Regression on amount with zero-inflation handling | Opportunity | Predicts expected value, captures partial stage value | Sensitive to outliers, needs a careful loss function | Amount prediction for late-stage deals |
| Survival (time-to-event) models | Opportunity | Predicts the probability of closing by each date, handles censoring of still-open deals | Requires duration features and careful interpretation | Slippage risk and close date timing |
| Exponential smoothing (ETS) or ARIMA family | Aggregate time series | Strong on seasonality and trend with few parameters | Needs stable patterns, limited use of exogenous drivers | Segment or region bookings by month |
| Gradient-boosted trees or elastic nets with exogenous features | Aggregate time series | Uses campaigns, capacity, and macro features | Risk of leakage, needs backtesting discipline | Outlook that blends drivers with history |
| Deep learning for sequences, for example LSTM or Temporal Fusion Transformer | Either | Captures complex temporal effects and interactions | Data hungry, harder to explain to the field | Large catalogs or multi-signal series |
| Hierarchical reconciliation | Aggregate across levels | Keeps top and bottom forecasts consistent | Adds process complexity | Region-to-territory roll-ups |
| Ensembles and model blending | Either | Usually more accurate and robust | Requires governance and monitoring | Final operational forecast |
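The survival approach is the least familiar to most sales teams. Its key idea is that open deals are not failures; they are right-censored, meaning we only know they have not closed yet. A Kaplan-Meier estimator, sketched here in plain Python, uses that partial information instead of discarding open deals. This is a simplification: it models time to a won close only, whereas a fuller treatment would handle lost deals as a competing risk. Libraries such as lifelines provide production versions (for example `KaplanMeierFitter`).

```python
from collections import Counter


def kaplan_meier(durations, closed):
    """Kaplan-Meier curve for 'days from creation until close'.

    durations: days to close (closed deals) or days open so far (open deals)
    closed:    True if the close was observed, False if the deal is still open (censored)
    Returns [(day, probability the deal is still open after that day), ...].
    """
    events = Counter(d for d, c in zip(durations, closed) if c)
    leaving = Counter(durations)  # deals exiting the risk set at each duration
    at_risk = len(durations)
    survival = 1.0
    curve = []
    for t in sorted(leaving):
        closes = events[t]
        if closes:
            survival *= 1 - closes / at_risk
            curve.append((t, survival))
        at_risk -= leaving[t]  # censored deals stay at risk up to and including t
    return curve


def prob_closed_by(curve, day):
    """Probability of closing within `day` days, read off the step function."""
    still_open = 1.0
    for t, s in curve:
        if t > day:
            break
        still_open = s
    return 1 - still_open


durations = [10, 20, 20, 30, 40, 50]
closed = [True, True, False, True, False, True]
curve = kaplan_meier(durations, closed)
p30 = prob_closed_by(curve, 30)  # 1 - 4/9, about 0.56
```

Dropping the two open deals instead would overstate how quickly deals close, which is exactly the bias that feeds slippage.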

If you want a rigorous primer on time series methods, see Forecasting: Principles and Practice by Hyndman and Athanasopoulos, a widely used free text that emphasizes reproducible evaluation and simple baselines. The M4 and M5 forecasting competitions also show why combinations of methods and disciplined backtesting often outperform a single silver bullet.

- Forecasting: Principles and Practice by Hyndman and Athanasopoulos: https://otexts.com/fpp3/

- M4 Competition overview: https://www.mcompetitions.unic.ac.cy/the-m4-competition/

- M5 Competition overview: https://www.mcompetitions.unic.ac.cy/the-m5-competition/

Choose by question, then triangulate

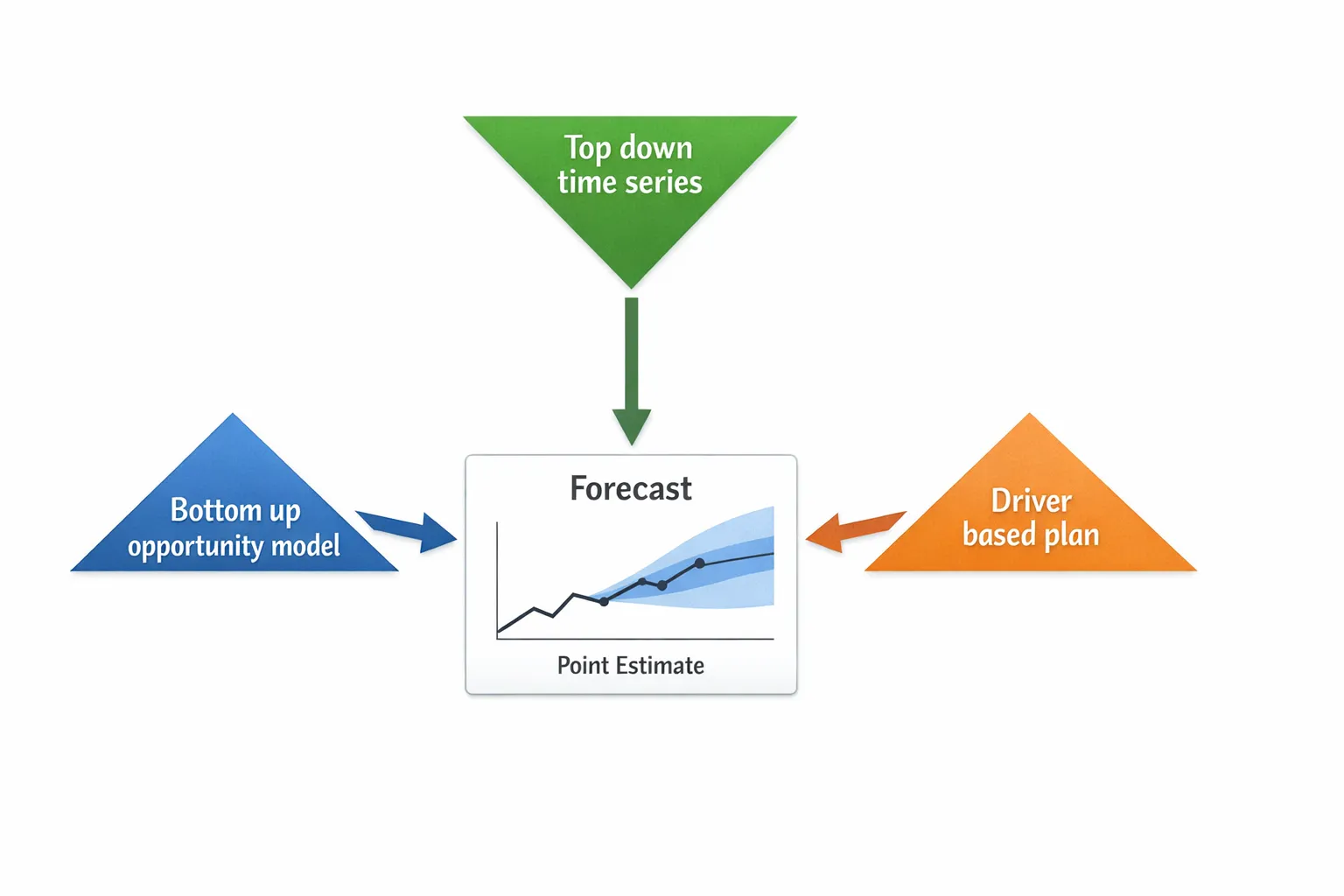

A reliable operating cadence uses multiple views, then reconciles them.

- Bottom-up opportunity view: classification or survival models estimate the probability that each deal will close inside the window. Multiply each probability by the deal amount to get expected value, then summarize by segment and owner.

- Top-down time series view: an ETS or gradient-boosted model forecasts monthly bookings from history plus exogenous drivers such as capacity and campaign calendars.

- Driver-based plan view: combine coverage rules, current conversion rates, and deal cycle times to sanity-check the first two views.

Blend the three into a single number with documented weighting and a prediction interval. Keep the weights stable for at least a quarter and manage changes with a champion versus challenger process.
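The arithmetic behind the bottom-up view and the blend is simple enough to show directly. A minimal sketch, assuming each deal already carries a calibrated probability of closing inside the window; the segment names, view names, and weights are hypothetical placeholders for your own documented weighting:

```python
from collections import defaultdict


def bottom_up(deals):
    """Expected bookings by segment: sum of P(close in window) x amount.

    deals: iterable of (segment, p_close_in_window, amount)
    """
    totals = defaultdict(float)
    for segment, p, amount in deals:
        totals[segment] += p * amount
    return dict(totals)


def blend(views, weights):
    """Weighted average of point forecasts; weights are normalized so they need not sum to 1."""
    total_weight = sum(weights[name] for name in views)
    return sum(views[name] * weights[name] for name in views) / total_weight


deals = [("mid_market", 0.8, 100_000), ("mid_market", 0.3, 50_000), ("enterprise", 0.5, 400_000)]
by_segment = bottom_up(deals)  # mid_market about 95,000; enterprise 200,000

views = {"bottom_up": sum(by_segment.values()), "time_series": 320_000, "driver": 300_000}
weights = {"bottom_up": 0.5, "time_series": 0.3, "driver": 0.2}  # hypothetical, hold fixed for the quarter
forecast = blend(views, weights)  # about 303,500
```

Summing expected values gives a point estimate only; the interval around it has to come from backtest errors or from simulating each deal as a win or loss draw.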

Measuring accuracy the right way

Accuracy is not a single metric. Use metrics that match the decision you are making, and evaluate on a rolling-origin backtest rather than a single random split.

- Opportunity-level classification: evaluate with the area under the precision-recall curve (PR AUC), since wins are relatively rare, plus the Brier score and calibration curves to test whether deals scored at 0.7 really close about 70 percent of the time. The scikit-learn documentation on probability calibration is a practical reference: https://scikit-learn.org/stable/modules/calibration.html

- Amount forecasts: use MAE or WAPE to avoid percentage blowups on small deals. sMAPE can help with scale-independent comparisons across segments.

- Aggregate time series: use MAPE or sMAPE only when values are not near zero, and evaluate the coverage of your prediction intervals; for example, 80 percent intervals should contain the actual about 80 percent of the time.
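Each probability check in the list above reduces to a few lines. A minimal plain-Python sketch (in production, scikit-learn's `brier_score_loss` and `calibration_curve` cover the first two); the equal-width bins are one common choice, not the only one, and the data is illustrative:

```python
def brier_score(probs, outcomes):
    """Mean squared gap between predicted probability and the 0/1 outcome (lower is better)."""
    return sum((p - y) ** 2 for p, y in zip(probs, outcomes)) / len(probs)


def reliability_table(probs, outcomes, n_bins=10):
    """Per probability bin: (mean predicted probability, observed win rate, deal count)."""
    bins = {}
    for p, y in zip(probs, outcomes):
        b = min(int(p * n_bins), n_bins - 1)  # p == 1.0 goes into the top bin
        bins.setdefault(b, []).append((p, y))
    table = []
    for b in sorted(bins):
        rows = bins[b]
        mean_p = sum(p for p, _ in rows) / len(rows)
        win_rate = sum(y for _, y in rows) / len(rows)
        table.append((round(mean_p, 3), round(win_rate, 3), len(rows)))
    return table


def interval_coverage(lower, upper, actual):
    """Share of actuals inside [lower, upper]; compare with the nominal level, e.g. 0.8."""
    hits = sum(lo <= a <= hi for lo, hi, a in zip(lower, upper, actual))
    return hits / len(actual)


probs = [0.9, 0.7, 0.7, 0.2, 0.1]
outcomes = [1, 1, 0, 0, 0]
score = brier_score(probs, outcomes)          # 0.128
table = reliability_table(probs, outcomes)    # 0.7 bin: mean 0.7, observed 0.5, n=2

lower, upper, actual = [90, 100, 80], [110, 130, 95], [105, 140, 90]
coverage = interval_coverage(lower, upper, actual)  # 2 of 3 inside
```

With real data, judge each bin only once it holds enough deals; a two-deal bin like the one above says little about calibration.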

| Metric | Use when | Why |
|---|---|---|
| MAE | Dollar error matters more than relative error | Stable and interpretable in currency units |
| WAPE | Mixed deal sizes across segments | Weighted by volume, good for sales aggregates |
| sMAPE | Comparing across series with different scales | Scale-free and bounded; despite the name, it penalizes under-forecasts more than over-forecasts of the same size |
| PR AUC | Few positive outcomes, for example wins | Focuses on precision and recall for the positive class instead of the many true negatives |
| Brier score and calibration | You act on probability thresholds | Tests probability quality, not just ranking |
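The three error metrics in the table differ only in how they scale absolute error. A plain-Python sketch; the sMAPE here uses the common definition with the mean of |actual| and |forecast| in the denominator (a 0 to 2 scale), and the last two lines show why its "symmetric" label is misleading: the same 50-unit miss scores worse as an under-forecast.

```python
def mae(actual, forecast):
    """Mean absolute error, in currency units."""
    return sum(abs(a - f) for a, f in zip(actual, forecast)) / len(actual)


def wape(actual, forecast):
    """Weighted absolute percentage error: total absolute error / total actual volume."""
    return sum(abs(a - f) for a, f in zip(actual, forecast)) / sum(abs(a) for a in actual)


def smape(actual, forecast):
    """Symmetric MAPE on a 0 to 2 scale; undefined when actual and forecast are both 0."""
    terms = [abs(a - f) / ((abs(a) + abs(f)) / 2) for a, f in zip(actual, forecast)]
    return sum(terms) / len(terms)


actual = [100, 200, 50]      # illustrative monthly bookings in $k for three segments
forecast = [110, 180, 70]
error_mae = mae(actual, forecast)    # 50 / 3, about 16.7
error_wape = wape(actual, forecast)  # 50 / 350, about 0.143

over = smape([100], [150])   # 50 / 125 = 0.4
under = smape([100], [50])   # 50 / 75, about 0.667
```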

Adopt rolling-origin backtesting. Train on months 1 to k, validate on month k + 1, then move the origin forward one month and repeat. This mirrors how the forecast is used in practice and prevents leakage of future information into training.
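Rolling-origin evaluation is a loop over expanding training windows. A minimal sketch with 0-based indices, so training indices 0 to k-1 correspond to months 1 to k and test index k to month k + 1; the naive "repeat last month" model inside the loop is only a placeholder for whatever you are evaluating. scikit-learn's `TimeSeriesSplit` implements a similar expanding-window split.

```python
def rolling_origin_splits(n_periods, min_train, horizon=1):
    """Yield (train_idx, test_idx) pairs with an expanding training window.

    The origin moves forward one period per fold, so the model never sees
    data from the test window or later.
    """
    for k in range(min_train, n_periods - horizon + 1):
        yield list(range(k)), list(range(k, k + horizon))


bookings = [120, 135, 128, 150, 160, 155]  # illustrative monthly bookings
errors = []
for train_idx, test_idx in rolling_origin_splits(len(bookings), min_train=3):
    forecast = bookings[train_idx[-1]]      # placeholder model: repeat last observed month
    errors.append(abs(bookings[test_idx[0]] - forecast))

backtest_mae = sum(errors) / len(errors)    # (22 + 10 + 5) / 3, about 12.3
```

Any feature engineering or calibration must also be fit inside each fold on `train_idx` only; fitting it once on the full history reintroduces the leakage the split is meant to prevent.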

Four levers that usually lift accuracy

- Better labels: define win, close date, and when an opportunity is considered dead. Inconsistent loss marking can hurt models more than missing features.

- Recency and momentum features: stage age, days since last meaningful activity, meetings completed, and whether a next meeting is set are often predictive. For LinkedIn-led motions, acceptance-to-reply conversion and qualified conversation rate are powerful early signals.

- Calibration and reason codes: use isotonic or Platt calibration to align predicted probabilities with observed outcomes. Expose top features as reason codes to reps and managers to build trust and support coaching.

- Ensembles and stability: simple averages of two or three strong but different models often beat any single model. Stability matters more than the last decimal of accuracy.
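Isotonic calibration fits a non-decreasing step function from raw model scores to observed win rates using the pool-adjacent-violators (PAV) algorithm. The sketch below is a from-scratch illustration; in practice scikit-learn's `IsotonicRegression` or `CalibratedClassifierCV(method="isotonic")` is the safer choice, and either way the calibrator should be fit on held-out data rather than the training set. The lookup returns the value of the covering step, a simplification of scikit-learn's linear interpolation between fitted points.

```python
def fit_isotonic(scores, outcomes):
    """Pool-adjacent-violators fit of outcome (0/1) against raw score.

    Returns [(max_score_in_block, calibrated_probability), ...] sorted by score,
    with probabilities guaranteed to be non-decreasing.
    """
    blocks = []  # each block: [sum_of_outcomes, count, max_score]
    for s, y in sorted(zip(scores, outcomes)):
        blocks.append([float(y), 1, s])
        # Merge backwards while the previous block's mean exceeds the newest block's mean
        while len(blocks) > 1 and blocks[-2][0] / blocks[-2][1] > blocks[-1][0] / blocks[-1][1]:
            y_sum, n, s_max = blocks.pop()
            blocks[-1][0] += y_sum
            blocks[-1][1] += n
            blocks[-1][2] = s_max
    return [(s_max, y_sum / n) for y_sum, n, s_max in blocks]


def calibrate(model, score):
    """Calibrated probability of the first block whose upper score bound covers `score`."""
    for s_max, p in model:
        if score <= s_max:
            return p
    return model[-1][1]


scores = [0.1, 0.2, 0.3, 0.4, 0.5, 0.6]   # illustrative held-out model scores
outcomes = [0, 1, 0, 0, 1, 1]
model = fit_isotonic(scores, outcomes)     # steps: 0.0, 1/3, 1.0, 1.0
p = calibrate(model, 0.25)                 # falls in the 0.2 to 0.4 block, about 0.33
```

Platt scaling instead fits a logistic curve to the scores; it needs less data but assumes a sigmoid shape, while isotonic is more flexible and more prone to overfitting small samples.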

A practical 30 day build plan

- Define scope and targets: pick one segment and one horizon, for example in-quarter commit for mid-market new business.

- Assemble the dataset: export 12 to 24 months of opportunities with the required fields, plus a monthly bookings series. Add conversation-level indicators if available.

- Baselines: create two baselines, a simple probability-weighted pipeline using static stage probabilities, and a naive time series forecast that repeats last month's value or the same month last year.

- Train first models: for commit, start with gradient-boosted classification for a win inside the window and a survival model for days to close. For the time series, fit ETS and a tree-based model with exogenous drivers.

- Backtest and pick: use rolling-origin evaluation. Keep one champion per view plus a simple-average ensemble.

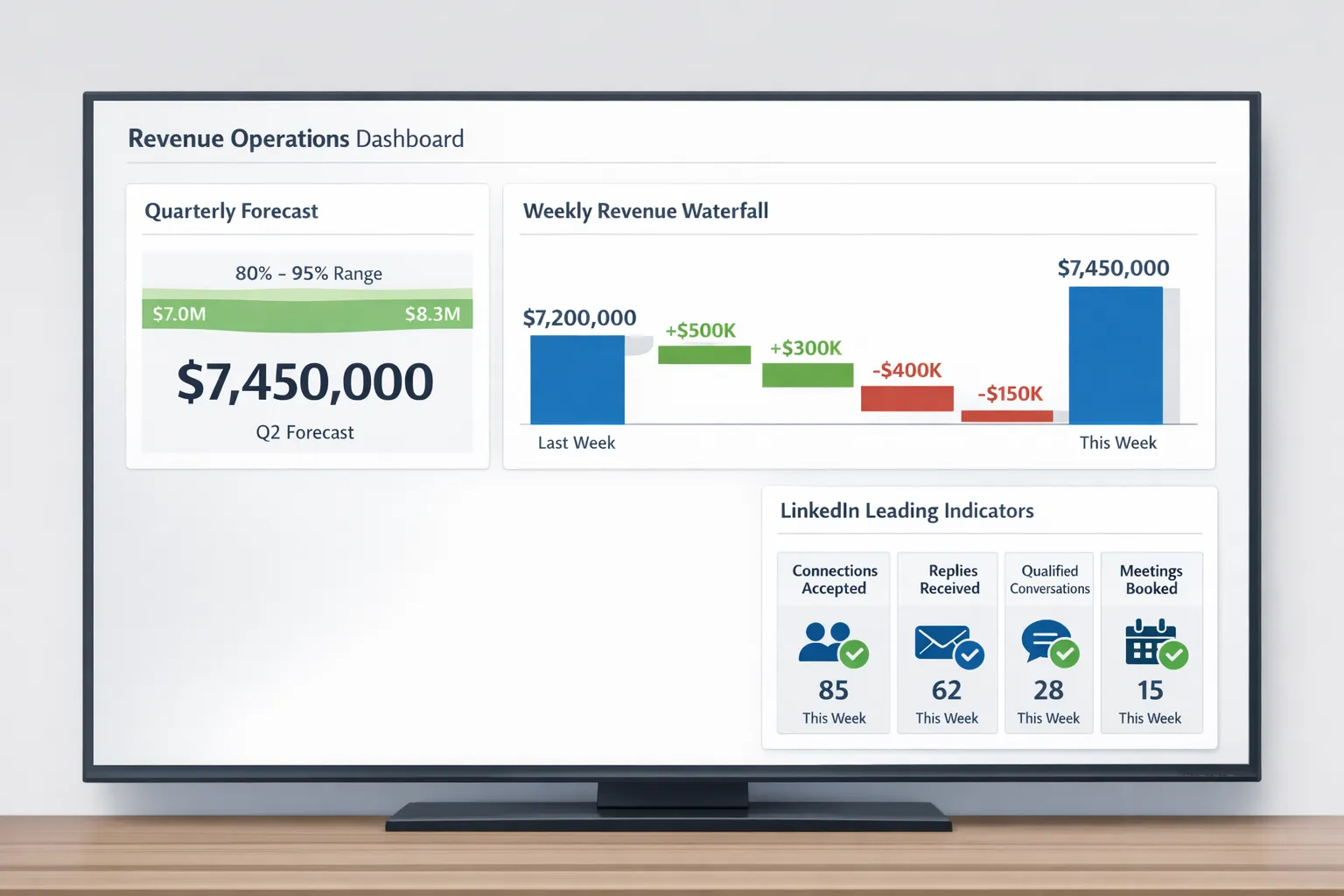

- Publish and explain: ship a lightweight dashboard that shows the point estimate, interval, and reason codes. Document the few rules that will not be automated yet, for example executive judgment on a top deal.

- Adopt weekly: run a 30-minute forecast call that compares this week's forecast with last week's and explains the deltas by reason, such as new meetings, stage progression, and conversation signals. Freeze model weights until the quarter ends.
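The two baselines from the Baselines step are deliberately simple, and that is the point: every model must beat them in the backtest. A sketch under stated assumptions: the stage names and probabilities are hypothetical placeholders for your own historical stage-to-win rates, and the seasonal naive forecast assumes monthly data with a 12-month season.

```python
# Hypothetical static stage probabilities; replace with your own historical stage-to-win rates.
STAGE_PROB = {"Discovery": 0.10, "Qualified": 0.25, "Proposal": 0.50, "Negotiation": 0.75}


def weighted_pipeline(opps):
    """Probability-weighted pipeline: sum of stage probability x amount.

    opps: iterable of (stage, amount) for deals expected to close in the window.
    Unknown stages get probability 0.
    """
    return sum(STAGE_PROB.get(stage, 0.0) * amount for stage, amount in opps)


def naive_forecast(history, horizon=1):
    """Repeat the last observed month."""
    return [history[-1]] * horizon


def seasonal_naive_forecast(history, horizon=1, season=12):
    """Repeat the value from the same month one season (12 months) earlier."""
    if len(history) < season:
        raise ValueError("need at least one full season of history")
    return [history[len(history) - season + (h % season)] for h in range(horizon)]


pipeline_baseline = weighted_pipeline(
    [("Proposal", 100_000), ("Negotiation", 40_000), ("Discovery", 20_000)]
)                                                   # about 82,000
monthly = list(range(1, 25))                        # 24 months of illustrative bookings
next_month = naive_forecast(monthly)                # [24]
next_quarter = seasonal_naive_forecast(monthly, 3)  # [13, 14, 15]
```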

Where LinkedIn conversations improve the forecast

Early signals are the difference between guessing and knowing. For social-led pipeline, the leading indicators below often move weeks before bookings do.

- Acceptance and reply rates rise when targeting and messaging resonate, which usually expands top-of-funnel volume.

- Qualified conversation rate and meetings-booked rate are stronger predictors of conversion than vanity metrics like connection count.

- Conversation sentiment and objection themes help forecast slippage and loss reasons, especially for late-stage deals.

Kakiyo autonomously manages personalized LinkedIn conversations, qualifies prospects, and books meetings at scale. Its intelligent scoring and advanced analytics create structured, real-time signals that feed your forecast features. Because Kakiyo also supports A/B prompt testing, you can connect improvements in conversion directly to uplift in forecasted bookings. With centralized dashboards and conversation override control, sales leaders keep humans in the loop while getting more timely data than manual logging can provide.

If you are formalizing a social-led forecast, codify these four metrics in your dataset and dashboards: acceptance, reply, qualified conversation, and meetings booked. Track how they lead pipeline created and stage progression over a 2 to 4 week window. For a practical LinkedIn workflow that maps cleanly to these signals, see our LinkedIn prospecting playbook: https://kakiyo.com/blog/linkedin-prospecting-playbook-from-first-touch-to-demo
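One way to check the leading relationship is to correlate a weekly indicator with pipeline created several weeks later and see which lag is strongest. A plain-Python sketch; the series names are hypothetical, the data is synthetic with a built-in 2-week lag, and a high correlation on a short history is a hint to test in a backtest, not proof of a causal lead.

```python
def pearson(x, y):
    """Pearson correlation of two equal-length sequences."""
    n = len(x)
    mx, my = sum(x) / n, sum(y) / n
    cov = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sx = sum((a - mx) ** 2 for a in x) ** 0.5
    sy = sum((b - my) ** 2 for b in y) ** 0.5
    return cov / (sx * sy)


def lagged_correlations(indicator, outcome, lags=(0, 1, 2, 3, 4)):
    """Correlate the indicator in week t with the outcome in week t + lag."""
    return {lag: pearson(indicator[: len(indicator) - lag], outcome[lag:]) for lag in lags}


# Synthetic weekly data: pipeline created is 10x meetings booked two weeks earlier
meetings_booked = [5, 8, 6, 9, 12, 7, 10, 14, 9, 11]
pipeline_created = [40, 45] + [10 * m for m in meetings_booked[:-2]]

corr = lagged_correlations(meetings_booked, pipeline_created)
best_lag = max(corr, key=corr.get)  # 2 for this synthetic series
```

Longer lags leave fewer overlapping weeks, so their correlations are noisier; compare lags on the same window length when the history is short.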

Governance, trust, and drift

- Explanations: expose top features per deal and per segment. Keep the list short and human readable.

- Overrides with accountability: allow manager overrides with a comment, and automatically track the delta from the model output. Review overrides each month.

- Champion versus challenger: hold current models steady through a quarter. Test challengers offline and promote one only after it beats the champion on the same backtest.

- Drift monitoring: watch for input drift (for example, stage mix or average deal size), calibration drift, and performance drift. Recalibrate probabilities more often than you retrain models.

- Compliance and privacy: limit personal data to what forecasting actually needs. Aggregate or hash where you can.
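For input drift on a categorical feature such as stage mix, the Population Stability Index (PSI) is a common summary: it compares each category's share now against a reference period. A minimal sketch; the thresholds in the docstring are a widely used rule of thumb rather than a standard, and the shares are illustrative.

```python
import math


def psi(reference, current, eps=1e-6):
    """Population Stability Index between two {category: share} distributions.

    Shares of 0 are floored at eps so the log stays defined.
    Rule of thumb: < 0.1 stable, 0.1 to 0.25 worth a look, > 0.25 significant shift.
    """
    total = 0.0
    for cat in set(reference) | set(current):
        r = max(reference.get(cat, 0.0), eps)
        c = max(current.get(cat, 0.0), eps)
        total += (c - r) * math.log(c / r)
    return total


last_quarter = {"Discovery": 0.40, "Proposal": 0.35, "Negotiation": 0.25}
this_quarter = {"Discovery": 0.20, "Proposal": 0.40, "Negotiation": 0.40}
drift = psi(last_quarter, this_quarter)  # about 0.22: worth a look before trusting the model
```

A shift like this one, toward later stages, may be genuine pipeline progress rather than a data problem; PSI only says the inputs changed, and the calibration and performance checks say whether the model still holds up.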

Benchmarks and expectations

Accuracy varies by cycle length, deal size variance, and data volume. Useful targets to consider as you mature the program:

- Aggregate quarterly forecast at the segment level: WAPE under 20 percent, with prediction intervals that capture actuals at the stated rate.

- In-quarter commit at the opportunity level: calibrated probabilities that pass a reliability test, for example deals in the 0.7 bucket close near 70 percent of the time.

- Time to close: survival models that reduce late-stage slippage by flagging risk about 2 weeks earlier than rules alone.

These are not hard rules; they are reference points to help you set goals and communicate progress.

Frequently Asked Questions

What is AI sales forecasting? AI sales forecasting uses statistical and machine learning methods to predict bookings or revenue, often combining opportunity-level models with aggregate time series and producing probability-based estimates with confidence bands.

Which model should I start with? Start with a simple probability-weighted pipeline baseline and a classical time series baseline. Then add a gradient-boosted classifier for in-quarter wins and an ETS model for monthly bookings. Blend them and compare the blend against the baselines.

How do I deal with long enterprise cycles? Use survival models to estimate time to close, rely more on driver based and coverage views, and lengthen your backtest windows. Early conversation and meeting signals become more valuable when cycles are long.

How do I make probabilities trustworthy? Calibrate them. Use isotonic or Platt scaling, and monitor the Brier score and reliability diagrams quarterly. See scikit-learn's calibration guide: https://scikit-learn.org/stable/modules/calibration.html

Should I use deep learning? Only if you have the volume and engineering capacity. Many teams find ensembles of simpler models plus disciplined backtesting match or beat deep nets for B2B sales.

What is the role of prediction intervals? Point estimates hide risk. Intervals quantify the plausible range of outcomes, which helps leaders plan cash and capacity and make commits that account for that risk.
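A simple way to get an interval without distributional assumptions is to reuse the errors from a rolling-origin backtest: add their lower and upper quantiles to the point forecast. A sketch under that assumption; the residuals are illustrative, the quantile uses linear interpolation between sorted values, and with only a handful of folds the tails will be noisy, so check realized coverage over time.

```python
def quantile(sorted_values, q):
    """Linear-interpolation quantile of an already sorted list (0 <= q <= 1)."""
    pos = q * (len(sorted_values) - 1)
    i = int(pos)
    if i + 1 >= len(sorted_values):
        return sorted_values[-1]
    frac = pos - i
    return sorted_values[i] + frac * (sorted_values[i + 1] - sorted_values[i])


def empirical_interval(point_forecast, residuals, level=0.8):
    """Prediction interval from past backtest errors (actual minus forecast).

    Assumes future errors resemble past backtest errors at the same horizon.
    """
    r = sorted(residuals)
    tail = (1 - level) / 2
    return point_forecast + quantile(r, tail), point_forecast + quantile(r, 1 - tail)


# Illustrative errors (in $k) from ten rolling-origin backtest folds
residuals = [-30, -20, -10, -5, 0, 5, 10, 15, 25, 40]
low, high = empirical_interval(300, residuals, level=0.8)  # about (279, 326.5)
```

The interval is asymmetric because the backtest errors are: this hypothetical model has historically under-forecast more often than it over-forecast.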

How do LinkedIn signals fit in? Treat acceptance, reply, qualified conversation, and meetings booked as leading indicators. They improve early detection of pipeline changes and can be engineered into both opportunity-level and aggregate models.

Where can I learn the fundamentals of forecasting? Forecasting: Principles and Practice is an excellent applied resource: https://otexts.com/fpp3/. Salesforce also provides a practical overview of sales forecasting concepts: https://www.salesforce.com/solutions/sales/resources/what-is-sales-forecasting/

Turn conversations into a forecast advantage

If you want earlier, more reliable signals, instrument the conversations that create pipeline. Kakiyo manages personalized LinkedIn conversations, qualifies prospects, and books meetings at scale, then surfaces intelligent scores and analytics that you can feed into your forecast features. See how conversation level data can improve both pipeline quality and forecast accuracy. Visit Kakiyo: https://www.kakiyo.com or explore our guide to AI sales automation for the full prospecting to qualification pipeline: https://kakiyo.com/blog/ai-sales-automation-from-prospecting-to-qualification