AI Sales Metrics: What to Track Weekly

Practical weekly scorecard and operating rhythm for AI-assisted outbound (LinkedIn): what to track, how to slice metrics, and actions to take when metrics move.

If you are running AI-assisted outbound and still managing the team by “meetings booked,” you are flying blind.

AI changes the pace and shape of the funnel. It can create more conversations than humans can manually triage, it can vary message quality across prompt versions, and it can quietly drift over time. That means your weekly metric review needs to do two things at once:

- Prove you are creating qualified pipeline, not just activity.

- Catch quality and governance issues early, before they show up as bad meetings and rejected opportunities.

Below is a practical weekly scorecard for AI sales motions (especially LinkedIn conversation-led outreach), plus how to interpret each metric and what to do when it moves.

What “weekly” metrics are actually for (in AI sales)

Weekly tracking is not forecasting, and it is not performance theater. It is an operating system.

A good weekly AI sales metric set should:

- Detect funnel leaks early (before the month is over).

- Separate throughput from quality (so you do not optimize for vanity metrics).

- Enable controlled experimentation (prompts, ICP slices, offers).

- Maintain safety and brand standards (policy, tone, escalation).

If your scorecard cannot tell you “what changed, where, and why,” it is not a scorecard. It is just a dashboard.

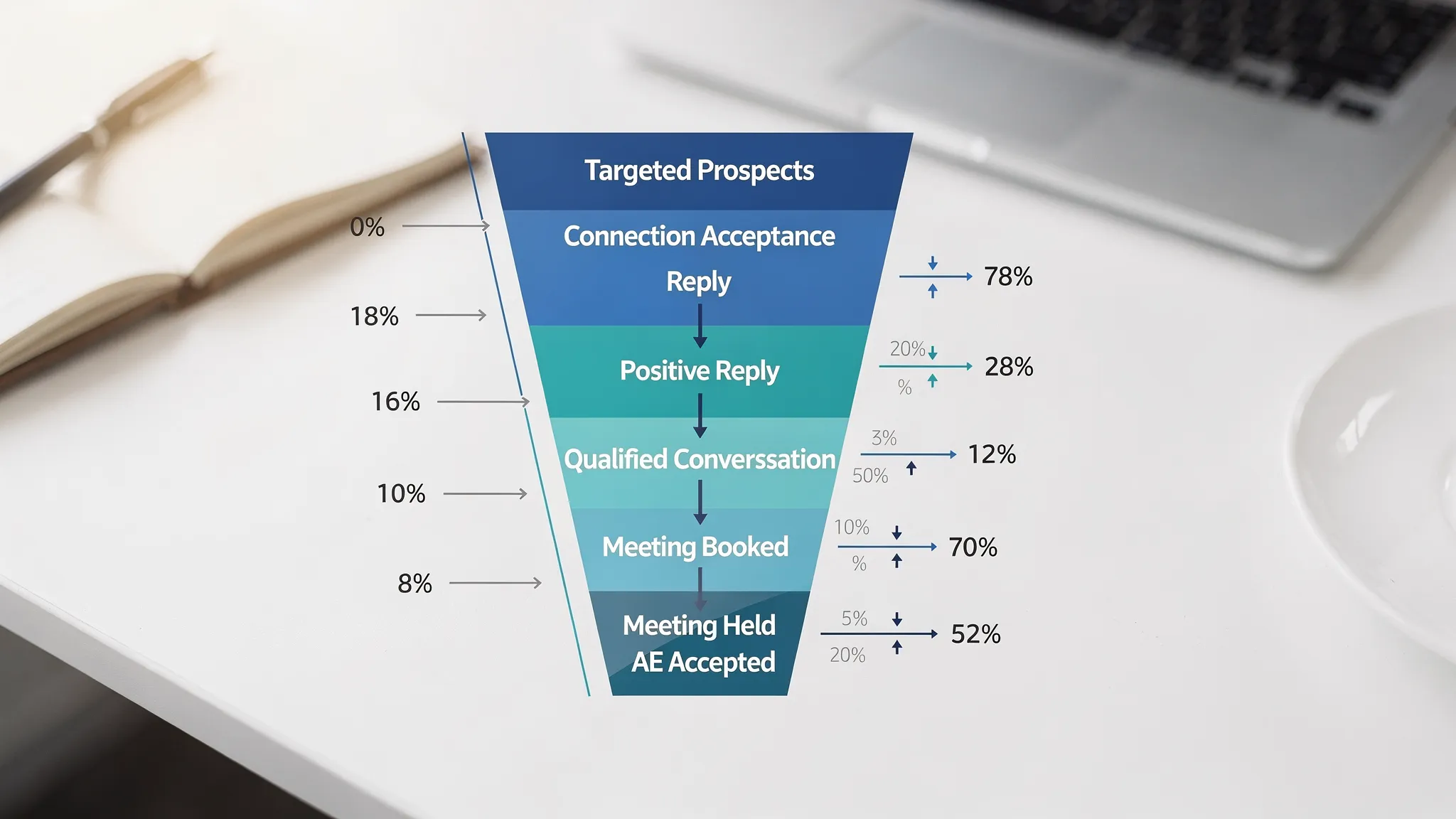

Start with a simple AI outbound funnel (so metrics map to stages)

For AI-driven LinkedIn outreach, the cleanest funnel is still micro-conversion based:

- Targeted prospects (ICP list quality)

- Connection acceptance

- First reply

- Positive reply (or “engaged reply”)

- Qualified conversation

- Meeting booked

- Meeting held

- AE accepted (or opportunity created)

You do not need to track every stage daily. Weekly is about stability and diagnosis.

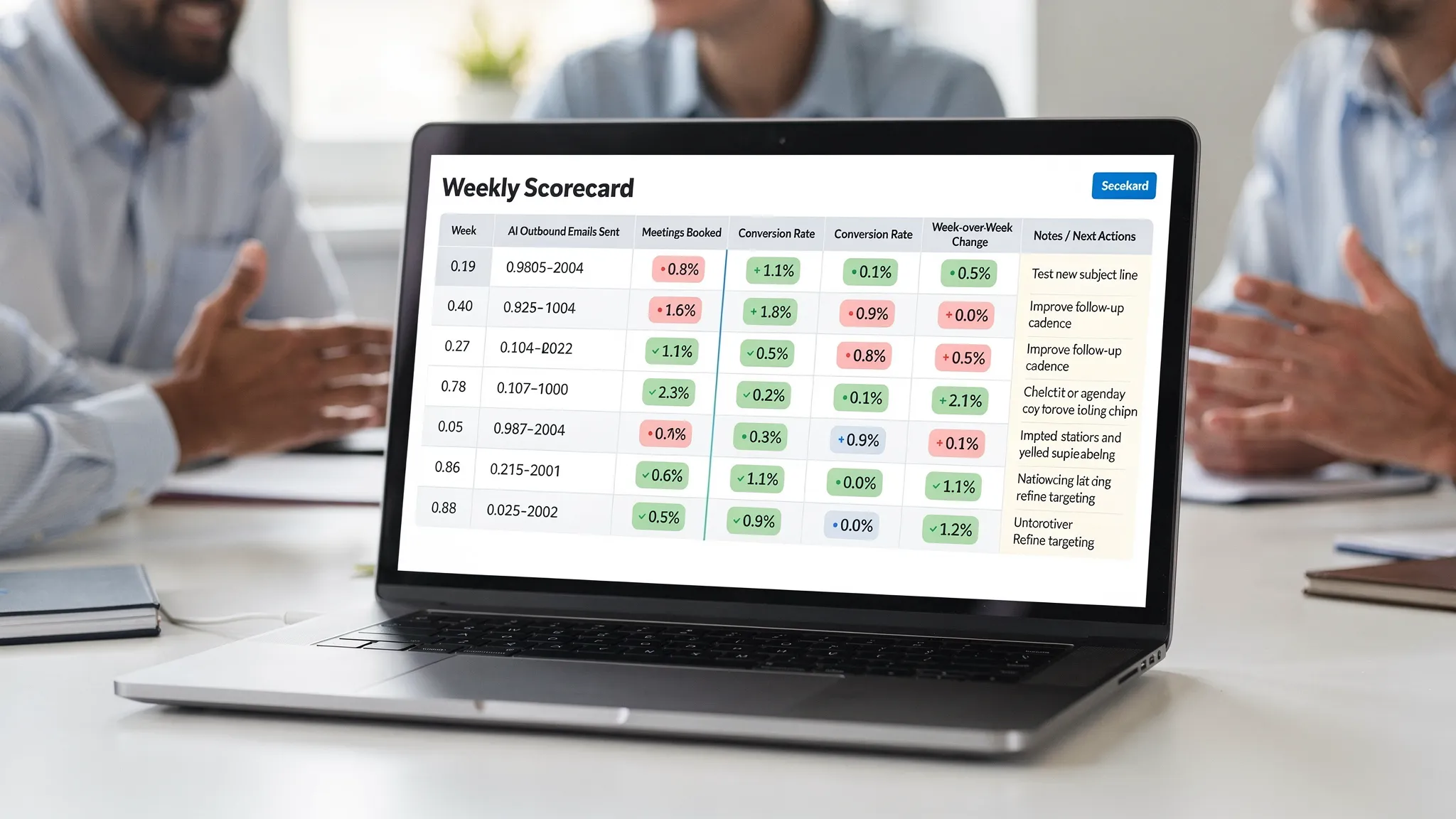

The weekly AI sales metrics scorecard (what to track)

Use one scorecard for the team, and slice it by segment (ICP tier, industry, region, persona, campaign, prompt version). If you only look at blended averages, you will miss where the AI is winning or failing.

Core weekly metrics (with definitions and why they matter)

| Metric | What it measures | How to calculate (weekly) | Why it matters weekly |
|---|---|---|---|
| ICP coverage (by tier) | Whether outreach is going to the right people | Prospects messaged in ICP ÷ total prospects messaged | Prevents “scale to the wrong audience,” a common AI failure mode |
| New conversations started | Top-of-funnel throughput that is closer to reality than “messages sent” | Unique prospects with a two-way thread started | Shows whether you are creating actual surface area for qualification |
| Connection acceptance rate | Targeting relevance + profile credibility | Accepted connection requests ÷ requests sent | Drops often mean list quality issues, not copy issues |
| Reply rate (overall) | Message relevance and timing | Prospects who replied ÷ prospects contacted | First signal that messaging is earning attention |
| Positive reply rate | Whether replies contain opportunity, not just noise | Positive/meaningful replies ÷ total prospects contacted (or ÷ total replies) | Paired with reply rate to avoid optimizing for low-quality replies |
| Qualified conversation rate | In-thread qualification effectiveness | Qualified conversations ÷ total conversations started | The most important “AI is doing real SDR work” indicator |
| Meeting booked rate (from qualified) | Your CTA and scheduling flow quality | Meetings booked ÷ qualified conversations | Separates “good qualification” from “can we convert intent into a calendar event” |
| Meeting held rate | Real buyer intent and expectation-setting | Meetings held ÷ meetings booked | Catch “AI booked it, but it was weak” patterns fast |
| AE acceptance rate (or opp conversion) | Downstream quality | AE-accepted meetings ÷ meetings held (or opportunities created ÷ meetings held) | Prevents meeting volume from masking bad fit |
| Median time to first response (by your side) | Speed and momentum | Median minutes/hours to respond after prospect replies (human or AI) | Long delays kill conversion in conversational channels |
| AI override / escalation rate | Where humans must step in | Threads escalated or overridden ÷ total active threads | Rising rate can signal prompt issues, new objections, or governance gaps |
| Evidence capture rate | Whether your CRM notes and fields are audit-ready | Qualified threads with captured fit/intent evidence ÷ total qualified threads | Without evidence, “qualified” becomes opinion and alignment breaks |
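If you keep raw weekly counts in a spreadsheet or CRM export, the ratio metrics in the table reduce to a handful of divisions. The sketch below is one possible way to compute them, not a prescribed implementation. The `WeeklyCounts` fields, the `scorecard` function, and the sample numbers are all hypothetical, and it assumes "prospects contacted" means prospects messaged and that positive reply rate is divided by prospects contacted rather than by total replies.

```python
from dataclasses import dataclass
from typing import Dict, Optional


@dataclass
class WeeklyCounts:
    # Raw counts for one week. All field names are illustrative assumptions.
    prospects_messaged: int
    prospects_messaged_in_icp: int
    requests_sent: int
    requests_accepted: int
    prospects_replied: int
    positive_replies: int
    conversations_started: int
    qualified_conversations: int
    meetings_booked: int
    meetings_held: int
    ae_accepted_meetings: int


def rate(numerator: int, denominator: int) -> Optional[float]:
    # Return None instead of dividing by zero in a quiet week.
    return numerator / denominator if denominator else None


def scorecard(c: WeeklyCounts) -> Dict[str, Optional[float]]:
    # Each entry mirrors one "How to calculate" cell from the table.
    return {
        "icp_coverage": rate(c.prospects_messaged_in_icp, c.prospects_messaged),
        "connection_acceptance_rate": rate(c.requests_accepted, c.requests_sent),
        "reply_rate": rate(c.prospects_replied, c.prospects_messaged),
        "positive_reply_rate": rate(c.positive_replies, c.prospects_messaged),
        "qualified_conversation_rate": rate(c.qualified_conversations, c.conversations_started),
        "meeting_booked_rate": rate(c.meetings_booked, c.qualified_conversations),
        "meeting_held_rate": rate(c.meetings_held, c.meetings_booked),
        "ae_acceptance_rate": rate(c.ae_accepted_meetings, c.meetings_held),
    }


# Made-up numbers, only to show the shape of the output.
week = WeeklyCounts(
    prospects_messaged=400, prospects_messaged_in_icp=340,
    requests_sent=400, requests_accepted=120,
    prospects_replied=60, positive_replies=24,
    conversations_started=60, qualified_conversations=18,
    meetings_booked=9, meetings_held=7, ae_accepted_meetings=5,
)
card = scorecard(week)
for name, value in card.items():
    shown = "n/a" if value is None else f"{value:.1%}"
    print(f"{name}: {shown}")
```

Whichever denominators you choose, keep them fixed from week to week so that changes in the numbers reflect changes in the funnel and not changes in the arithmetic.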

A note on terminology: define “positive reply” and “qualified conversation” in writing. If you do not, the team will relabel outcomes to match goals.

If you need a defensible qualification method, it helps to standardize on fit, intent, and evidence (conversation proof). Kakiyo covers the foundations of repeatable qualification in its guide to lead qualification.

AI-specific metrics most teams forget (but should review weekly)

Traditional SDR teams can sometimes wait a month to notice a problem. AI-driven outreach creates enough volume that small issues compound quickly. These are the AI-native checks that keep quality stable.

Prompt and experiment health

If you are using AI to run conversations, your “copy” is not one message. It is a system of prompts, templates, and rules.

Track weekly:

- Prompt version performance: qualified conversation rate and meeting held rate by prompt version.

- Prompt coverage: percent of active threads running the latest approved prompt.

- A/B test status: tests running, paused, or concluded, and what decision was made.

This is where many teams slip. They run tests, see a lift in replies, and ship it. The correct success metric is usually later in the funnel (qualified conversations, held meetings, AE acceptance).
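To make the point concrete, here is a minimal sketch of judging prompt versions on qualified conversation rate instead of reply rate. The thread records, the `summarize` and `pick_winner` helpers, and the `min_conversations` threshold are hypothetical. It also assumes that a thread with a reply counts as a conversation started.

```python
from collections import defaultdict
from typing import Dict, List, Optional, Tuple

# Hypothetical thread records: (prompt_version, replied, qualified).
Thread = Tuple[str, bool, bool]

threads: List[Thread] = (
    [("v1", True, True)] * 10 + [("v1", True, False)] * 10 + [("v1", False, False)] * 80
    + [("v2", True, True)] * 6 + [("v2", True, False)] * 24 + [("v2", False, False)] * 70
)


def summarize(rows: List[Thread]) -> Dict[str, Dict[str, float]]:
    # Count contacted, replied, and qualified threads per prompt version.
    totals = defaultdict(lambda: {"contacted": 0, "replied": 0, "qualified": 0})
    for version, replied, qualified in rows:
        totals[version]["contacted"] += 1
        totals[version]["replied"] += int(replied)
        totals[version]["qualified"] += int(qualified)
    summary = {}
    for version, t in totals.items():
        summary[version] = {
            "conversations": t["replied"],
            "reply_rate": t["replied"] / t["contacted"],
            # Qualified conversations over conversations started.
            "qualified_rate": t["qualified"] / t["replied"] if t["replied"] else 0.0,
        }
    return summary


def pick_winner(summary: Dict[str, Dict[str, float]], min_conversations: int = 20) -> Optional[str]:
    # Ignore versions with too few conversations to judge (threshold is an assumption).
    eligible = {v: s for v, s in summary.items() if s["conversations"] >= min_conversations}
    if not eligible:
        return None
    return max(eligible, key=lambda v: eligible[v]["qualified_rate"])


summary = summarize(threads)
winner = pick_winner(summary)
print(summary)
print("ship:", winner)
```

In this made-up data, `v2` earns more replies but `v1` produces more qualified conversations, so `v1` is the version to ship. The same comparison can be repeated on meeting held rate or AE acceptance once enough volume reaches those stages.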

Kakiyo supports customizable prompt creation and A/B prompt testing, which is exactly the kind of workflow you want when you treat outbound as an experiment system, not a “set it and forget it” sequence.

Autonomy and safety

Even if the AI is doing most of the work, you still need visibility into where it is safe to let it run.

Track weekly:

- Autonomy rate: percent of threads that progressed from first touch to qualification without manual intervention.

- Escalation reasons (top 3): pricing request, competitor comparison, security/compliance, “not me,” “not now,” etc.

- Policy or tone violations (internal): any messages flagged by your team for being off-brand or risky.
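The first two checks in this list are easy to compute from thread-level records. The sketch below is illustrative only: the record layout and sample data are hypothetical, and it assumes the autonomy rate denominator is the set of threads that reached qualification.

```python
from collections import Counter
from typing import List, Optional, Tuple

# Hypothetical thread records:
# (reached_qualification, manual_intervention, escalation_reason)
ThreadRecord = Tuple[bool, bool, Optional[str]]

records: List[ThreadRecord] = [
    (True, False, None),
    (True, False, None),
    (True, False, None),
    (True, True, "pricing request"),
    (False, True, "pricing request"),
    (False, True, "pricing request"),
    (False, True, "security/compliance"),
    (False, True, "security/compliance"),
    (False, True, "competitor comparison"),
    (False, False, None),
]


def autonomy_rate(rows: List[ThreadRecord]) -> Optional[float]:
    # Share of qualified threads that got there with no manual intervention.
    qualified = [r for r in rows if r[0]]
    if not qualified:
        return None
    autonomous = [r for r in qualified if not r[1]]
    return len(autonomous) / len(qualified)


def top_escalation_reasons(rows: List[ThreadRecord], n: int = 3) -> List[Tuple[str, int]]:
    # Tally the reason on every escalated thread and keep the n most frequent.
    reasons = Counter(r[2] for r in rows if r[2] is not None)
    return reasons.most_common(n)


autonomy = autonomy_rate(records)
top_reasons = top_escalation_reasons(records)
print(f"autonomy rate: {autonomy:.0%}")
print("top escalation reasons:", top_reasons)
```

Keeping escalation reasons as a small fixed vocabulary makes the weekly top 3 comparable over time.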

This is not bureaucracy. It is how you prevent one “creative” prompt change from turning into a brand problem.

For a broader guardrail approach, it is worth aligning with a safety-first automation mindset like the one in automated LinkedIn outreach.

How to read the scorecard (diagnosis, not vibes)

Weekly metrics are only useful if they tell you where to look next. A simple diagnostic map helps managers coach faster and prevents random changes.

A quick “if this drops, check this” table

| If this metric drops… | Check these likely causes first | Typical fix |
|---|---|---|
| Connection acceptance rate | ICP drift, weaker targeting signals, profile credibility, too many requests too fast | Tighten list filters, refresh ICP tiers, adjust first-touch framing |
| Reply rate | Message relevance, timing, personalization inputs, offer clarity | Rewrite value hypothesis, improve trigger selection, test a shorter opener |
| Positive reply rate | You are earning replies from the wrong audience, or asking a weak question | Narrow ICP slice, change the question to a higher-signal micro-yes |
| Qualified conversation rate | Qualification questions too early or too vague, not capturing evidence | Update qualification flow, add proof prompts, standardize definitions |
| Meeting booked rate (from qualified) | CTA friction, scheduling mechanics, unclear next step | Make the CTA simpler, offer 2 options, improve meeting framing |
| Meeting held rate | Over-promising, weak expectation setting, wrong persona, calendar fatigue | Tighten pre-meeting confirmation, improve agenda, qualify timeline better |
| AE acceptance rate | Bad fit, shallow need discovery, missing buying group info | Improve evidence capture, enforce minimum qualification proof |
| Override/escalation rate rises | New objection patterns, prompt regression, new segment rollout | Review thread examples, adjust prompts, update escalation rules |

This is the same logic used in good conversational selling systems: diagnose the stage, then change one variable at a time.

Weekly slices that matter (so you do not get fooled by averages)

Do not just track the scorecard overall. Every week, slice at least one way.

High-value slices for AI sales motions:

- By persona (Champion vs Economic Buyer vs Technical evaluator)

- By ICP tier (Tier 1 named accounts vs Tier 3 broad)

- By industry (language and pains vary significantly)

- By prompt version (this is your “release tracking”)

- By source list (Sales Nav list A vs enrichment vendor list B)

A common weekly pattern: overall reply rate looks stable, but Tier 1 acceptance and positive reply rates drop. That is often a signal that the AI is scaling into less relevant Tier 1 accounts (or the prompt is too generic for high-stakes buyers).
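A small worked example shows how a blended average can hide a segment-level drop. The rows and helper names below are hypothetical, and positive reply rate is computed here as positive replies divided by prospects contacted.

```python
from typing import Dict, List, Optional

# Hypothetical weekly rows, one per ICP tier.
last_week = [
    {"tier": "Tier 1", "contacted": 100, "positive": 10},
    {"tier": "Tier 3", "contacted": 300, "positive": 12},
]
this_week = [
    {"tier": "Tier 1", "contacted": 100, "positive": 6},
    {"tier": "Tier 3", "contacted": 300, "positive": 16},
]


def positive_reply_rate(rows: List[dict]) -> Optional[float]:
    # Pool the counts first, then divide, so larger slices weigh more.
    contacted = sum(r["contacted"] for r in rows)
    positive = sum(r["positive"] for r in rows)
    return positive / contacted if contacted else None


def by_slice(rows: List[dict], key: str) -> Dict[str, Optional[float]]:
    # Group rows by the chosen slice and compute the rate per group.
    groups: Dict[str, List[dict]] = {}
    for r in rows:
        groups.setdefault(r[key], []).append(r)
    return {name: positive_reply_rate(group) for name, group in groups.items()}


blended_last = positive_reply_rate(last_week)
blended_now = positive_reply_rate(this_week)
tiers_last = by_slice(last_week, "tier")
tiers_now = by_slice(this_week, "tier")

print(f"blended: {blended_last:.1%} -> {blended_now:.1%}")
for tier in tiers_now:
    print(f"{tier}: {tiers_last[tier]:.1%} -> {tiers_now[tier]:.1%}")
```

In this made-up data the blended rate is identical in both weeks, while Tier 1 falls from 10% to 6%. Only the sliced view surfaces the problem.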

If you want a deeper LinkedIn-first workflow that naturally maps to these slices, see LinkedIn prospecting playbook: from first touch to demo.

What to review weekly vs monthly (so your meetings stay short)

Not everything belongs in a weekly meeting. Weekly is for leading indicators and operational health. Monthly is for efficiency and pipeline outcomes.

Here is a practical split:

| Cadence | Track | Reason |
|---|---|---|
| Weekly | Acceptance, reply, positive reply, qualified conversation rate, booked and held meetings, AE acceptance, time-to-response, overrides/escalations | These change fast and are directly coachable |
| Monthly | Pipeline sourced, win rate by source, CAC or cost per meeting, multi-touch attribution, segment expansion decisions | Needs larger samples and depends on sales cycle length |

If you try to do all of this weekly, the team will optimize for what they can move quickly (often activity) instead of what matters.

A lightweight weekly operating rhythm (30 to 45 minutes)

A weekly AI sales metrics review should feel like a product growth meeting: ship, measure, learn.

A simple structure that works:

Scorecard first (10 minutes)

Look at the 12 metrics above. Do not debate fixes yet. Mark what changed versus last week and versus a 4-week rolling baseline.
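One way to mark what changed is to compare each metric to last week and to the mean of the previous four weeks. The sketch below assumes you store one value per metric per week. The `compare_to_baseline` helper, the 10% relative-drop `tolerance`, and the sample history are hypothetical choices, not a standard.

```python
from statistics import mean
from typing import Dict, List


def compare_to_baseline(history: List[float], current: float,
                        window: int = 4, tolerance: float = 0.10) -> Dict[str, object]:
    # history holds prior weekly values, oldest first; current is this week.
    if not history:
        raise ValueError("need at least one prior week")
    baseline = mean(history[-window:])  # rolling baseline over the last `window` weeks
    relative_change = (current - baseline) / baseline if baseline else 0.0
    return {
        "vs_last_week": current - history[-1],
        "vs_baseline": current - baseline,
        # Flag only drops larger than the tolerance (an assumed threshold).
        "flagged": relative_change < -tolerance,
    }


# Made-up qualified conversation rates for the previous four weeks.
qualified_rate_history = [0.30, 0.32, 0.28, 0.30]
result = compare_to_baseline(qualified_rate_history, current=0.24)
steady = compare_to_baseline(qualified_rate_history, current=0.29)
print(result)
print(steady)
```

Here a fall from a 0.30 baseline to 0.24 is flagged, while 0.29 is treated as normal week-to-week noise. Tune the window and tolerance to your own volume.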

One funnel leak (10 minutes)

Pick one stage where conversion degraded (for example, qualified conversations to meetings booked). Pull 5 to 10 real threads as evidence.

One experiment decision (10 minutes)

Decide one of:

- Ship a winning prompt version

- Pause a test

- Start a new test with a clear hypothesis

Keep it to one decision. AI makes it tempting to run too many experiments without learning.

One governance check (5 minutes)

Review escalations, overrides, and any flagged messages. Confirm guardrails still match reality.

Assign one change (5 minutes)

One owner, one change, one expected metric movement.

This rhythm pairs well with systems that provide centralized conversation visibility and analytics. Kakiyo’s real-time dashboard and advanced analytics & reporting are designed for exactly this kind of weekly review.

A practical note on “efficiency” metrics (track them, but do not let them drive)

AI sales programs often try to justify themselves with efficiency alone (messages per hour, conversations per SDR). Those are useful, but only when paired with outcome quality.

If you want one weekly efficiency metric that does not create bad behavior, use:

Cost per qualified conversation (or cost per held meeting), calculated consistently.
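"Calculated consistently" mostly means using the same cost components every week. A minimal sketch, assuming three hypothetical components (tooling, data, and human review time) and made-up numbers:

```python
from typing import Optional


def weekly_cost(tooling: float, data: float, human_hours: float, hourly_rate: float) -> float:
    # Sum the same components every week so the metric stays comparable.
    return tooling + data + human_hours * hourly_rate


def cost_per(total_cost: float, outcomes: int) -> Optional[float]:
    # None when there were no outcomes, instead of dividing by zero.
    return total_cost / outcomes if outcomes else None


total = weekly_cost(tooling=500.0, data=200.0, human_hours=10.0, hourly_rate=60.0)
per_qualified = cost_per(total, outcomes=26)  # 26 qualified conversations
per_held = cost_per(total, outcomes=13)       # 13 held meetings
print(total, per_qualified, per_held)
```

With these inputs the weekly cost is 1,300, which works out to 50 per qualified conversation and 100 per held meeting.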

What matters is not that the AI created 3x more threads. What matters is whether it created more qualified conversations that turned into held meetings accepted by sales.

Where Kakiyo fits (without changing your entire stack)

If your team sells on LinkedIn, the main operational challenge is that the thread is the work. Metrics are only as good as your ability to:

- Run many 1:1 conversations without losing quality

- Standardize qualification signals

- Test prompts in a controlled way

- Escalate to humans when needed

- Report outcomes by prompt, segment, and stage

Kakiyo is built around that workflow, using autonomous LinkedIn conversations that progress from first touch to AI-driven qualification and meeting booking, with prompt testing, industry templates, an intelligent scoring system, and override controls so reps can step in when it matters.

If you are already running AI on LinkedIn and want a tighter measurement loop, it is also worth comparing conversation-led systems to step-based sequencers in Sales AI tools vs legacy sequencers.

The simplest way to start this week

If you do nothing else, do this:

- Create a one-page weekly scorecard with the 12 metrics above.

- Define “positive reply” and “qualified conversation” in writing.

- Review the scorecard weekly, and make exactly one change per week based on thread evidence.

That is how AI sales becomes predictable. Not by adding more tools, but by treating outreach like a measurable system that learns every week.